The Big Release Roundup: IntelliJ IDEA, Quarkus, Hibernate, and much more! - JVM Weekly vol. 95

Today, we’re coming to you on a Friday due to yesterday being a public holiday for much of Europe, including Poland.

There have been several releases, so today's edition will be dedicated solely to them. I still hope you'll be satisfied, as besides the standard projects, I managed to dig up some exciting ones.

IntelliJ IDEA 2024.2

Let’s kick off our Release Radar with a bang, as the new version of IntelliJ IDEA has just been released.

IntelliJ IDEA 2024.2 brings some significant updates for JVM developers, especially those working with Spring, beyond the usual generic functionality. To start, support for Spring Data JPA has been significantly enhanced. You can now run Spring Data JPA methods directly in the IDE, making it easier to test and customize repositories without leaving your coding sanctuary, which is certainly what JetBrains wants the IDE to be.

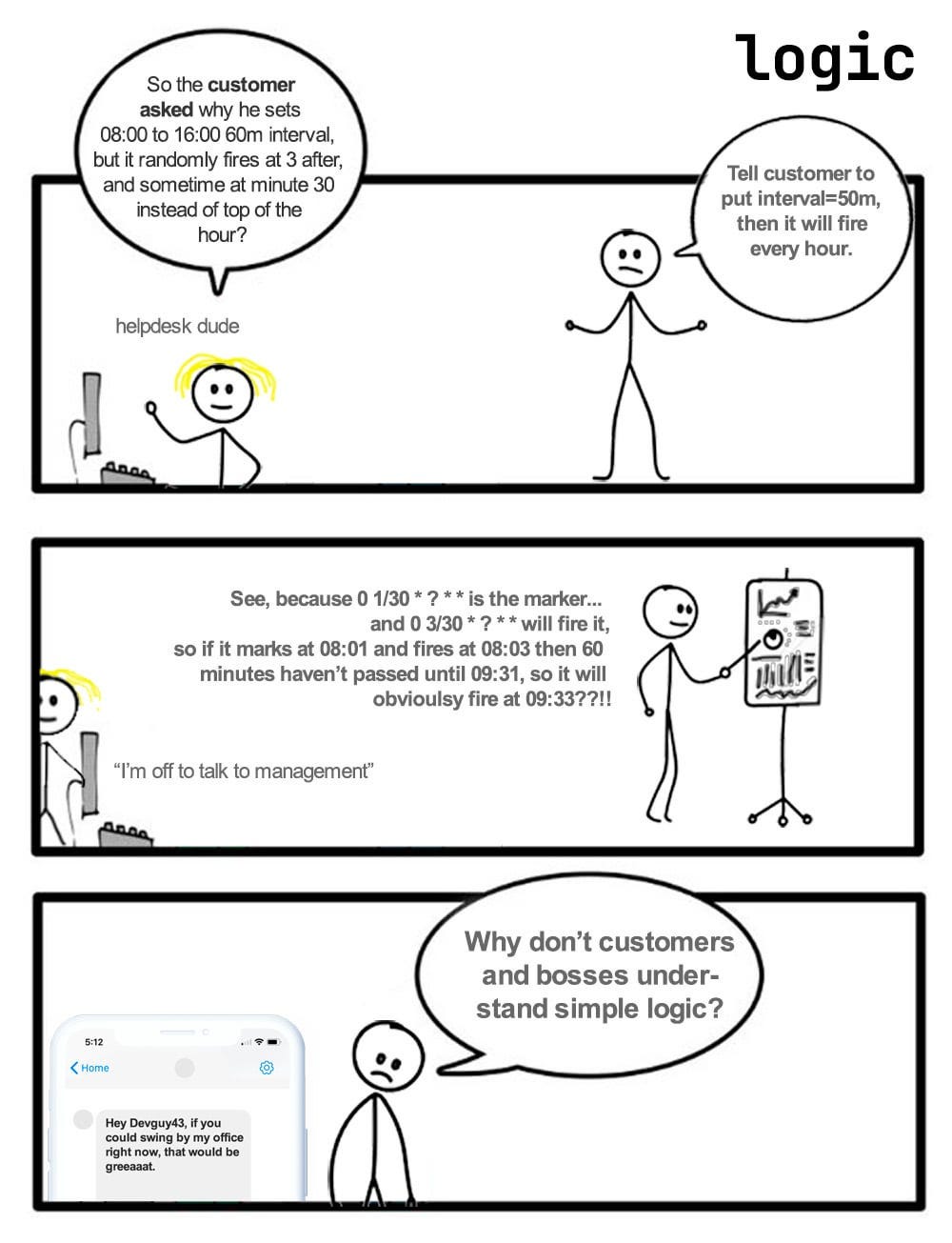

Additionally, managing cron expressions has become much simpler—let’s face it, cron expressions are "silent but deadly" sources of production errors. The cron syntax has never been the most intuitive one.

IntelliJ IDEA 2024.2 now offers inline descriptions and autocomplete with ready-made examples when configuring Spring, Quarkus, or Micronaut scheduling services.

Moreover, if you’re debugging @Scheduled methods in Spring, you can now run them in debug sessions. No more manually tweaking schedules and waiting to see if everything triggers correctly.
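To show why cron expressions trip people up, here is a minimal sketch that labels the fields of a Spring-style cron expression. It assumes Spring's six-field layout (seconds first); the `CronFields` class and its `label` method are hypothetical helpers made up for illustration, not part of Spring or IntelliJ IDEA.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class CronFields {
    // Field order of a Spring-style cron expression: six fields, seconds first.
    private static final List<String> NAMES = List.of(
            "second", "minute", "hour", "day-of-month", "month", "day-of-week");

    // Splits the expression on whitespace and pairs each part with its field name.
    static Map<String, String> label(String expression) {
        String[] parts = expression.trim().split("\\s+");
        if (parts.length != NAMES.size()) {
            throw new IllegalArgumentException(
                    "expected " + NAMES.size() + " fields but got " + parts.length);
        }
        Map<String, String> labeled = new LinkedHashMap<>();
        for (int i = 0; i < parts.length; i++) {
            labeled.put(NAMES.get(i), parts[i]);
        }
        return labeled;
    }

    public static void main(String[] args) {
        // Fires at 09:00:00 on every weekday.
        label("0 0 9 * * MON-FRI").forEach((name, value) ->
                System.out.println(name + " = " + value));
    }
}
```

A classic mistake is pasting a five-field Unix crontab entry such as `0 9 * * 1-5` into a scheduler that expects six fields; the sketch rejects it with an exception instead of silently shifting every field by one.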

We also have another crossover episode. The IntelliJ HTTP client now operates based on GraalJS. Thanks to this move, the latest JavaScript standards are fully supported, and startup times are significantly faster. So if anyone ever asks what Truffle is used for, it turns out you might be using it every day.

But that’s not all. In IntelliJ IDEA 2024.2, Kotlin developers will find a new, improved K2 mode, designed to increase stability and prepare for future Kotlin language features. To clarify the terminology a bit—unlike the Kotlin K2 compiler, which is for compiling code specifically for Kotlin 2.0 or later, K2 mode in IntelliJ IDEA is about making day-to-day coding smoother and faster. It leverages the advanced capabilities of the K2 compiler to enhance code analysis, offering more reliable and faster code highlighting, autocomplete, and other key IDE features, without affecting the actual code compilation. However, note that some third-party plugins might not yet be compatible with K2 mode due to recent changes in the Kotlin plugin API.

Scala developers will also benefit from many improvements in this release. IntelliJ IDEA 2024.2 enhances support for Scala 3, improving handling of the "fewer braces" syntax, export clauses, and extension methods. Additionally, the performance of compiler-based error highlighting has been improved, and Scala compiler diagnostics have been integrated as regular quick fixes, simplifying debugging and code enhancement. Code completion has also been improved: it can now fill in all named arguments of a method automatically and offers better support for union types.

Among the more general but very useful changes, the 2024.2 release introduces a modernized terminal, now resembling the Warp terminal I use, which brings the ability to generate commands with AI.

With this feature enabled, users can generate contextual commands using natural language, which are then created by the assistant and ready for execution or further editing.

However, that’s not the end of the AI-related news: IntelliJ IDEA’s AI Assistant has become even smarter with GPT-4o support, and the chat now includes references for better context. This update also introduces AI-powered VCS conflict resolution (I need to play around with this feature to fully evaluate it).

Finally, the new JetBrains UI, designed to reduce visual complexity and make key features more accessible, is now the default setting for all users.

For those who prefer the traditional look, the classic UI is still available as a plugin.

Hibernate 6.6, 7.0 Beta, and a new reactive variant

Next, let’s take a look at Hibernate, as the project has treated us to several simultaneous updates.

We’ll start with the new version of the "main" library. Hibernate 6.6 introduces several significant features, including a full implementation of the Jakarta Data 1.0 standard. The Jakarta Data implementation in Hibernate 6.6 is based on StatelessSession and generates code at compile time using an annotation processor (if you’re not familiar with how such tools work, I recommend reading Build your own framework using a Java annotation processor by Jacek Dubikowski), which provides strong type safety. Additionally, the new @ConcreteProxy annotation provides better control over lazy initialization of objects, eliminating issues related to incorrect casting of inherited classes and serving as an improved replacement for the deprecated @Proxy and @LazyToOne annotations.

Another significant new feature in Hibernate 6.6 is extended array support, including the ability to map arrays of embeddable types and a new syntax for array operations and substrings. For example:

array(1, 2) -> [1, 2]

substring(stringPath, 2, 1) -> stringPath[2]

Moreover, the introduction of inheritance for @Embeddable classes allows for more complex data models, where embedded classes can inherit properties from other embedded classes using a discriminator column (please let me know if anyone uses this and where). Finally, support for vector types in Oracle databases has been added, and managing @OneToMany relationships combined with @Any has been simplified, increasing flexibility in modeling complex relationships.

But that’s not all—Hibernate Reactive 2.4.0 has also been released, a tool for reactive projects based on Hibernate ORM. The new version is compatible with Hibernate ORM 6.6.0, meaning Hibernate Reactive users can benefit from all the above new features.

Simultaneously, the first beta of Hibernate 7.0 has been released, which will require Java 17 and introduces a migration to Jakarta Persistence 3.2. The new version offers more validation of the application’s domain model, increasing the accuracy and consistency of object-relational mapping. Hibernate 7.0 introduces extended XSD for Jakarta Persistence with Hibernate-specific features and moves from Hibernate Commons Annotations to the new Hibernate Models project for domain model management. Additionally, a tool has been provided to help migrate older hbm.xml formats. We just have to wait and see.

One final thought—Hibernate could do with a bit more order in its versioning. The main release is 6.6, the compatible Hibernate Reactive is 2.4, and at the same time, stable Hibernate Search is already at version 7.2.0. It would be good to get this sorted.

Quarkus 3.13

Last week, I talked about the new home of the Quarkus project; this time, we’ll discuss its latest version.

Quarkus 3.13 introduces several significant updates, including OpenTelemetry extensions that now support OpenTelemetry 1.39 and Instrumentation 2.5.0, and add support for OpenTelemetry Metrics. The documentation has been updated to reflect these changes, offering guides for OpenTelemetry Tracing and Metrics. Additionally, the TLS store introduced in Quarkus 3.12 now offers features like automatic certificate reloading and support for Kubernetes secrets and Cert-Manager. New CLI commands for managing TLS configurations have also been added.

Other noteworthy updates in Quarkus 3.13 include a pass-through HTTP proxy for REST clients in development mode, which makes it easier to inspect requests with network traffic analyzers such as Wireshark that capture and examine the packets sent over the network.

The WebSockets Next extension (Quarkus’s own name, not a new standard—I mention this because I was initially confused) now supports suspend functions in Kotlin.

Meanwhile, ArC, the build-time oriented implementation of the CDI (Contexts and Dependency Injection) specification created for Quarkus, has been updated to allow interceptors on producers and synthetic beans. Furthermore, the new @WithTestResource annotation replaces the deprecated @QuarkusTestResource, and a new configuration option allows disabling automatic application reloading in development mode.

bld 2.0

After covering several major, more well-known projects, it’s time to highlight a smaller, more niche, but still interesting one.

bld is a project build system that allows writing build logic in pure Java. It was created for developers who want to focus on building applications rather than learning the abstractions and workings of a build tool, which conceptually resembles the philosophy of tools like AWS Cloud Development Kit. In bld, all build operations are imperative and explicit, without automagic actions that can automate many things but also introduce unnecessary complications. The user has full control over managing libraries, and all build logic is written in Java, allowing the use of all the advantages of the programming language, such as IDE support. bld is distributed as a single JAR file, making it easy to integrate and use.

The recently released bld 2.0 brings many improvements (as one might expect from a major version number), the most important being a new dedicated plugin for IntelliJ IDEA. This version also adds support for displaying extension dependencies in the dependency-tree command, generating POM properties, and offline mode, which blocks internet access. Error reporting, dependency version handling, and the built-in documentation system have also been improved. Version 2.0 also features better dependency cache management.

WildFly 33

It’s been a while since we’ve mentioned anything about Jakarta EE (although with the JakartaOne conference approaching, that’s likely to change soon), and here we are today for the second time (after Hibernate). That’s why I’m excited that the next item is the new version of perhaps the most interesting application server.

The main features introduced in WildFly 33 are increased flexibility and control over deployment and server configuration management. Support for overriding configurations with YAML files has been expanded, now allowing unmanaged deployments, which enables direct use of deployment contents without the server creating internal copies. Unmanaged deployments are those where the server directly uses the application’s provided content, instead of creating an internal copy within its infrastructure. This makes the deployment process more flexible, as the server uses the application files in their original location, which can be useful in specific scenarios, such as using tools that do not support managed deployments.

Other improvements, such as enhanced reverse proxy configuration and the ability to customize AJP headers, offer greater flexibility in handling network traffic and integrating with various systems. Meanwhile, support for sending encrypted authentication requests in the elytron-oidc-client subsystem increases security. Last but not least, WildFly 33’s full compliance with Jakarta EE 10 and Java SE 21 means that all developers can finally use stable versions of both projects.

Langchain4j 0.33

Everyone has been predicting that the latest AI bubble is slowly bursting, but the shovel producers are still doing well, as we have just seen new versions of two projects that serve as convenient facades over various Gen AI APIs.

Let’s start with the latest version of langchain4j. langchain4j 0.33 introduces, among other things, integration with Redis, which now allows for efficient conversation memory management via the RedisChatMemoryStore class. In addition, enum handling with the @Description annotation has been improved.

Regarding additional model support capabilities, the integration with Google Gemini has been significantly updated to now support various input types such as audio, video, and PDF. Support for Ollama has been migrated to Jackson for better JSON processing. Other noteworthy changes include support for the Titan V2 embedding model in Amazon Bedrock, API Endpoints for OVHcloud (I delved deeper into this and didn’t expect such a rich offering from this company in terms of AI, which only shows the massive commoditization), and increased embedding management capabilities in integrations with Chroma and Pinecone.

Semantic Kernel for Java 1.2.0

Staying on the topic of AI, let’s take a look at a solution from the competition.

The release of Semantic Kernel for Java v1.2.0 brings the first set of significant improvements and new features since the GA version released in May. The key change is the creation of a dedicated repository for the Java version, significantly increasing the potential for collaboration with external developers. The update also resolves community-reported issues from the GA release, such as chat history duplication, and enhances the type conversion mechanisms. New features have also been introduced, such as support for image content in chat messages, Gemini integration, and OpenTelemetry for performance monitoring. Additionally, integration with Presidio has been added to enhance data privacy by removing sensitive information from prompts.

The release also introduces experimental features such as support for Hugging Face and vector databases with vector storage. This last feature allows for storing and retrieving business data as vector embeddings, serving as long-term semantic memory for AI applications. The release also includes (also experimental) support for Azure AI Search and Redis Vector Search, enabling developers to efficiently manage and search stored data.

Fury 0.6.0 and 0.7.0

And now it’s time to return to one of the most interesting projects of the last year, to which we once dedicated the main topic of an edition.

There are two basic types of serialization: static and dynamic. Frameworks that use static serialization, like protobuf, operate based on a pre-established schema. Serialization is performed based on this schema, which requires both the sender and receiver to be aware of it beforehand. This approach offers speed and efficiency, but has its drawbacks, mainly a lack of flexibility: communication between different programming languages based on static data structures can be difficult, especially when schema evolution is needed. On the other hand, dynamic serialization frameworks, such as Serializable in the JDK, Kryo, or Hessian, do not rely on a fixed schema. They discover the data structure at runtime, making them more flexible. This translates to a better user experience and the ability to use polymorphism. Unfortunately, this flexibility often comes with a performance hit, which can be an issue in situations requiring high throughput, such as large data transfers.
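As a minimal sketch of the dynamic style, the example below round-trips a polymorphic list through the JDK's built-in `Serializable` mechanism. No schema is declared anywhere: the concrete types travel inside the byte stream. The `Shape`, `Circle`, and `Square` types are made up for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

final class DynamicSerializationDemo {
    // Hypothetical domain types; the stream records which subtype each element is.
    interface Shape extends Serializable {
        double area();
    }

    record Circle(double radius) implements Shape {
        @Override public double area() { return Math.PI * radius * radius; }
    }

    record Square(double side) implements Shape {
        @Override public double area() { return side * side; }
    }

    // Writes any Serializable object graph to a byte array.
    static byte[] serialize(Object value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Reads the object graph back; the receiver needs the classes but no schema file.
    static Object deserialize(byte[] data) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        List<Shape> shapes = new ArrayList<>();
        shapes.add(new Circle(1.0));
        shapes.add(new Square(2.0));
        byte[] payload = serialize(shapes);
        Object copy = deserialize(payload);
        System.out.println(copy.equals(shapes)); // prints "true"
        System.out.println(payload.length + " bytes for two small objects");
    }
}
```

The payload size printed at the end hints at the cost mentioned above: class descriptors are written into the stream, which is convenient but far from compact.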

Fury aims to find a balance between these two worlds, combining the flexibility of dynamic serialization with the efficiency of static serialization. The framework is built to ensure full compatibility with existing Java solutions, as well as provide versions for other platforms. Fury uses a range of advanced serialization methods while supporting SIMD operations. The solution also leverages zero-copy techniques, which avoid physically copying data between memory buffers or other system layers and thereby reduce latency during data transfers. Additionally, Fury includes a JIT compiler that uses object type information gathered at runtime to generate optimized serialization code. The framework also prioritizes efficient cache usage, maximizing CPU data and instruction cache hits, and supports multiple serialization protocols, adapting to different application needs.
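The zero-copy idea can be illustrated with plain java.nio, independent of Fury: instead of copying a payload into a new array, you hand out a view over the same memory. This is only a sketch of the general technique, not Fury's implementation, and `ZeroCopyViewDemo` is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class ZeroCopyViewDemo {
    // Returns a view of bytes [offset, offset + length) that shares memory with source.
    static ByteBuffer view(ByteBuffer source, int offset, int length) {
        ByteBuffer window = source.duplicate(); // independent position/limit, same bytes
        window.limit(offset + length);
        window.position(offset);
        return window.slice();
    }

    public static void main(String[] args) {
        byte[] message = "HEADERpayload".getBytes(StandardCharsets.US_ASCII);
        ByteBuffer payload = view(ByteBuffer.wrap(message), 6, 7);

        // Changing the underlying array is visible through the view,
        // which shows that no copy was made.
        message[6] = 'P';
        System.out.println(StandardCharsets.US_ASCII.decode(payload)); // prints "Payload"
    }
}
```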

The new versions of Fury introduce significant performance and functionality improvements. In version 0.6.0, a metadata sharing mode for schema evolution in Java has been added, which is now enabled by default in compatible mode. According to the developers, this mode is 50% faster than the previous KV-compatible mode and generates a serialized payload 1/6 the size of the previous one. It is also four times faster than protobuf and generates data less than half the size for complex objects. Version 0.7.0 introduces experimental support for fast deep object copying in Java, enabling easy and efficient object copying while preserving their references.

Akka 24.05

I know that after the license model change, many people have written off Akka, but it remains an interesting project that continues to surprise with its new versions. The latest release caught my attention with its intriguing features. Akka 24.05 introduces full active-active entity replication, a feature designed to meet the needs of high-availability, low-latency applications in multi-region, multi-cloud, and edge topologies. Active-active entities are entities whose data is stored simultaneously in multiple locations, so each location has a full copy. This means that no matter where you are, you can quickly access this data, and if one server goes down, another takes over without interruption.

This allows for entities to be replicated in different locations, such as the US and Europe, which are fully autonomous and capable of handling both read and write operations. As a result, Akka reduces latency for users in different regions and provides greater system resilience, as traffic can be redirected to other replicas in case of a failure in one location. The event replication mechanism has been designed to operate asynchronously, ensuring that updates are shared with all replicas even if the network connection is temporarily lost.
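To make the idea concrete, here is a minimal sketch of two replicas that both accept writes while disconnected and converge once they exchange state. It deliberately swaps in a simple state-based grow-only counter rather than Akka's event replication, and `ReplicatedCounter` is a hypothetical class, not an Akka API.

```java
import java.util.HashMap;
import java.util.Map;

final class ReplicatedCounter {
    private final String replicaId;
    // One slot per replica: each replica only ever increments its own slot.
    private final Map<String, Long> counts = new HashMap<>();

    ReplicatedCounter(String replicaId) {
        this.replicaId = replicaId;
    }

    // A local write: no coordination with other replicas is needed.
    void increment() {
        counts.merge(replicaId, 1L, Long::sum);
    }

    // Asynchronous catch-up: take the maximum seen for every replica's slot.
    // Merging is idempotent, so repeated or delayed deliveries are harmless.
    void mergeFrom(ReplicatedCounter other) {
        other.counts.forEach((id, n) -> counts.merge(id, n, (a, b) -> Math.max(a, b)));
    }

    long value() {
        return counts.values().stream().mapToLong(Long::longValue).sum();
    }

    public static void main(String[] args) {
        ReplicatedCounter us = new ReplicatedCounter("us");
        ReplicatedCounter eu = new ReplicatedCounter("eu");

        // Both regions accept writes while the link between them is down.
        us.increment();
        us.increment();
        eu.increment();

        // The link comes back and the replicas exchange state in both directions.
        us.mergeFrom(eu);
        eu.mergeFrom(us);
        System.out.println(us.value() + " " + eu.value()); // prints "3 3"
    }
}
```

A counter is the easy case because its updates never conflict; replicating richer entities requires a strategy for concurrent writes, which is where a dedicated framework earns its keep.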

Akka additionally allows for dynamic control over where entities are replicated using filters that can be adjusted in real-time to optimize network traffic and memory usage. This flexibility supports complex application topologies, allowing entities to move between points of presence (PoP) or reside in selected locations depending on the application’s needs.

Valkey Glide

Smoothly transitioning from Akka, which was forked into Pekko after its license change, it’s time to look at Redis, which gave rise to the Valkey project under similar circumstances.

Valkey was created in response to Redis’s license change, which switched from the open-source BSD 3-Clause license to the Redis Source Available License (RSALv2) and Server Side Public License (SSPLv1). This decision was met with significant dissatisfaction among developers and users. As a result, members of the open-source community, supported by The Linux Foundation, decided to create a fork of Redis called Valkey. This move proved to be much more popular than expected, attracting support from giants like AWS, Google Cloud, Oracle, and Verizon, while its first release, Valkey 7.2.5 GA, based on the last open-source version of Redis 7.2.4, was well received.

Valkey GLIDE is an open-source client library created for Valkey and Redis, sponsored by AWS. It supports Valkey version 7.2 and later, as well as Redis versions 6.2, 7.0, and 7.2. Compared to the popular Jedis, a Redis client written entirely in Java and optimized for the Java ecosystem, Valkey GLIDE takes a more versatile approach and is designed to support both Valkey and Redis across multiple programming languages. The core of the Valkey GLIDE driver is written in Rust, with language-specific wrappers on top. This allows Valkey GLIDE to provide a reliable and consistent experience not only for Java but also for other programming environments such as Python, with plans to support additional languages in the future.

Location4j

Location4j is a simple Java library designed for efficient and accurate geographical data lookup for countries, states, and cities. Interestingly, unlike other similar solutions, location4j works without using external APIs, making it both economical and fast. Its built-in data set enables quick lookups without the need for external HTTP connections, increasing application performance and reducing costs. Those who use the Google Maps API know that this is worth it.

The whole thing uses the dr5hn/countries-states-cities-database data set.

Mnemosyne

Almost at the end, we have another library from the interesting curiosities category.

Mnemosyne is a small caching library designed with simplicity and flexibility in mind. Unlike many existing libraries of this type, which are often complex and difficult to understand, Mnemosyne offers a minimalist approach without unnecessary code, redundant packages, or numerous external dependencies. It is easy to understand and modify even for less experienced developers, making it an interesting choice for those who adhere to the principle of "I don’t add dependencies to the code that I wouldn’t be able to maintain myself if needed." I know such people.

Mnemosyne is particularly easy to integrate with Spring-based applications. For applications using Spring Boot 3 or later, simply add the @Import(MnemosyneSpringConf.class) annotation to the main class and @Cached to the methods you want to cache. Mnemosyne also supports custom caching algorithms: you extend AbstractMnemosyneCache and specify your implementation in the @Cached annotation. Support for non-Spring-based applications is planned for the future, and the library’s creators are eager to receive feedback on its performance in other versions of Java, Spring, and JVM languages. Examples of usage show how easy it is to create a cache with annotations.
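For readers wondering what a "caching algorithm" looks like at this scale, below is a minimal least-recently-used cache built only on the JDK. It is a generic sketch and does not use Mnemosyne's classes; `LruCache` and its methods are hypothetical names chosen for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

final class LruCache<K, V> {
    private final Map<K, V> entries;

    LruCache(int capacity) {
        // accessOrder = true keeps the least recently used entry first,
        // and removeEldestEntry evicts it once the capacity is exceeded.
        this.entries = new LinkedHashMap<>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > capacity;
            }
        };
    }

    // Returns the cached value, or computes and stores it on a miss.
    synchronized V get(K key, Function<K, V> loader) {
        V cached = entries.get(key);
        if (cached == null) {
            cached = loader.apply(key);
            entries.put(key, cached);
        }
        return cached;
    }

    // Checks for presence without counting as a "use" of the entry.
    synchronized boolean contains(K key) {
        return entries.containsKey(key);
    }

    public static void main(String[] args) {
        LruCache<String, String> cache = new LruCache<>(2);
        Function<String, String> slowLookup = String::toUpperCase; // stand-in for expensive work

        cache.get("a", slowLookup);
        cache.get("b", slowLookup);
        cache.get("a", slowLookup); // hit: "a" becomes the most recently used entry
        cache.get("c", slowLookup); // miss: evicts "b", the least recently used entry

        System.out.println(cache.contains("a") + " " + cache.contains("b")); // prints "true false"
    }
}
```

The whole policy lives in one overridden method, which is roughly the level of complexity a small caching library needs to hide behind an annotation.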

I must admit that I once had the opportunity to write such a micro-caching library, and it’s quite an interesting experience. If I had to point out one reason to take a closer look at Mnemosyne, it’s that due to its simplicity, you can learn a lot from the creator.

TennessineC

I saved TennessineC for dessert. Not everyone may be aware that the JVM is not written in Java, but in C/C++.

TennessineC is a compact C language compiler, written entirely in Java, designed specifically to create executable files for the Windows platform on i386. Despite being at an early stage of development, the compiler already supports a rudimentary FFI via the #pragma tenc import directive, variables, functions (though they are still in development), control structures such as "while" loops and "if" statements, and basic operations like addition and subtraction. Currently, the compiler only supports the 32-bit int data type and is not strictly compliant with any existing C language standard, making it more of a learning tool or experimental project than a full-fledged compiler.

The project was inspired by the minimalist PE101 documentation, and the developer uses tools like Tiny C Compiler for testing and Ghidra for analyzing compiled code. It seems that for those interested in compiler theory, this is a really interesting opportunity, especially since I took a look at the sources and the code is surprisingly readable.