May: The Rest of the Story - JVM Weekly vol. 131

The new edition of JVM Weekly is here and... it is nuts 🥶

Since the last few editions have been quite thematic, I’ve gathered many interesting links in May that didn’t make it into previous issues. Many aren’t extensive enough for a full section, but they still seem compelling enough to share.

And to wrap things up, I’m adding a few (this month, a lot…) releases and updates.

1. Missed in May

Let’s start with the birthday wishes: Java just turned 30 and has entered its fourth decade in style, under Oracle’s banner. Back in March, JavaOne 2025 already adopted an anniversary theme, adding retrospective keynotes and a special “Java at 30” track. The Inside Java newsletter also covered the behind-the-scenes details and celebration plans in its May issue, encouraging the community to join in. On top of that, May 22nd saw a six-hour livestream featuring James Gosling, Brian Goetz, Mark Reinhold, and Georges Saab, hosted by Ana-Maria Mihalceanu, Billy Korando, and Nicolai Parlog.

Partners and vendors joined the toast: JetBrains launched the #Java30 campaign with a “What’s your inner Duke?” quiz and limited-edition T-shirts up for grabs.

JetBrains also published a “Java Good Reads” list, released a plugin that changes the IDE splash screen, and even dropped a “Java 30” TikTok duet track. And before anyone tries to look cool by asking “What even is TikTok?”, just go play that song.

But that’s not all! Here are a few more highlights:

Azul hosted its virtual event, Duke Turns 30, as early as March, gathering Java Champions for panels on the future of JVM performance.

Also in March (because who says you have to celebrate only on your actual birthday?), Payara looked back at Java’s journey, highlighting the evolution from set-top boxes to microservices in the cloud.

The community joined in too: Cyprus JUG hosted an evening of lightning talks and a Duke cake on May 23rd, and similar events popped up in the calendars of many JUGs and conferences, adding special anniversary sessions.

The upshot of all these distributed but coordinated initiatives is more than just a nostalgic tribute: joint events, open-access content, and new IDE plugins are strengthening the network of relationships that has kept the Java ecosystem vibrant for 30 years—and is preparing it for another decade of innovation.

And hey, in just ten years we’ll be celebrating Java’s 40th. Because, let’s face it, Java is forever.

And now, time to step out of party mode and get back to other topics. Let’s start with further JDK evolution.

Paul Sandoz has posted a draft for a new JSON parsing/generation API on the core-libs-dev mailing list. The idea is “batteries included”: simple programs should be able to handle the most popular data interchange format without pulling in third-party libraries. The main requirements are a clear, compact API and performance that is “good enough”; the guiding philosophy is simplicity over the best possible performance.

The prototype is based on six interfaces (JsonObject, JsonArray, JsonString, JsonNumber, JsonBoolean, JsonNull) all extending a common JsonValue. The structure is immutable and immediately ready for pattern matching, letting you map a JSON tree directly to records or other model classes. The project deliberately skips data-binding and path-query layers—those are expected to be built as external tools.

The tricky topic of JSON numbers is addressed with three access methods (raw text, BigDecimal, heuristic Number), letting users pick their own precision.
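To picture how such a closed hierarchy works with pattern matching, here is a small Kotlin model of it. Only the six type names come from the draft; the data classes, the toBigDecimal/toNumber methods, and the Person mapping are hypothetical stand-ins, not the jdk-sandbox API.

```kotlin
import java.math.BigDecimal

// Hypothetical model: one sealed root and immutable subtypes named after the
// draft's interfaces. This is NOT the JDK API, only an illustration of its shape.
sealed interface JsonValue
data class JsonObject(val members: Map<String, JsonValue>) : JsonValue
data class JsonArray(val elements: List<JsonValue>) : JsonValue
data class JsonString(val value: String) : JsonValue
data class JsonBoolean(val value: Boolean) : JsonValue
object JsonNull : JsonValue

// Numbers keep their raw text; callers pick the precision they need.
data class JsonNumber(val raw: String) : JsonValue {
    fun toBigDecimal(): BigDecimal = BigDecimal(raw)        // exact
    fun toNumber(): Number = raw.toLongOrNull() ?: raw.toDouble() // heuristic
}

// A model class the tree is mapped onto.
data class Person(val name: String, val age: Int)

// Pattern matching over the sealed hierarchy: only objects with the right
// member types become a Person, everything else maps to null.
fun toPerson(value: JsonValue): Person? = when (value) {
    is JsonObject -> {
        val name = value.members["name"] as? JsonString
        val age = value.members["age"] as? JsonNumber
        if (name != null && age != null) Person(name.value, age.toBigDecimal().intValueExact()) else null
    }
    else -> null
}
```

Keeping the raw number text is what lets each caller choose between an exact BigDecimal and a cheaper heuristic Number, which is the trade-off the draft leaves to users.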

The code is already live in the json branch of the jdk-sandbox repo and passes nearly all of the JSONTestSuite. In practice, the JDK team is already using it, for example, in Project Babylon, to convert ONNX schemas.

Per-Åke Minborg from Oracle has revealed that we can expect significant improvements to Strings in JDK 25. It turns out that a single small annotation on the String.hash field - marking it as @Stable - has allowed the JVM to “constant fold” most invocations of String::hashCode.

Constant folding is a classic compiler optimization technique. Its goal is simple: during compilation, if the compiler finds operations whose results can be determined at compile time, it replaces them with their computed values. When a string literal is used as a key in an immutable map and the value is also accessed during class initialization, the JIT can now see the whole thing as a constant chain of computations, skipping both hash calculation and table lookup entirely.

In Per-Åke's micro-benchmark, the average lookup time in a static Map<String,MethodHandle> dropped from about 4.6 ns to 0.6 ns, roughly an eightfold speedup. This works because @Stable guarantees that once the field is set for the first time, its value never changes. Thanks to this, the JIT can substitute the literal hash result and then collapse the entire access path to the method or object stored in the map. The only real exception is strings whose hash is zero (for example, the empty string): for those, the optimization won’t kick in, although the team promises an additional workaround is on the way.
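Why does a zero hash spoil the party? @Stable only lets the JIT treat a field as constant once it holds a non-default value, and the cached hash uses 0 as its default. The Kotlin class below is a simplified model of that lazy caching, not the JDK's String source (which additionally keeps a separate hashIsZero flag to avoid recomputation); the class and its computations counter are hypothetical.

```kotlin
// Simplified model of a lazily cached String hash. The field starts at 0 and
// 0 also means "not computed yet", so a string whose real hash is 0 always
// looks unset, just as a @Stable field holding its default value does.
class CachedHashText(private val chars: String) {
    private var hash = 0 // 0 doubles as "unset"
    var computations = 0 // how often the hash was actually calculated
        private set

    fun cachedHash(): Int {
        var h = hash
        if (h == 0) {
            h = polynomialHash()
            computations++
            hash = h // storing 0 leaves the field looking unset
        }
        return h
    }

    // Same polynomial as java.lang.String.hashCode(): s[0]*31^(n-1) + ... + s[n-1]
    private fun polynomialHash(): Int {
        var h = 0
        for (c in chars) h = 31 * h + c.code
        return h
    }
}
```

For "abc" the hash is computed once and then reused; for "" every call starts from the default again, which mirrors why the JIT cannot fold that case.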

While @Stable is still an internal JDK annotation, work is already underway on JEP 502: Stable Values (Preview), which aims to make similar guarantees available to user code.

On April 30, as announced by Brian Stansberry, WildFly officially joined the Commonhaus Foundation - following in the footsteps of Quarkus, which made the same move at the end of 2024. This shift marks a significant step for both projects, as they look to modernize their governance and make it easier for new contributors to get involved. The move also signals a departure from single-sponsor dependency and a more open, community-driven model.

Commonhaus Foundation offers projects legal status, a lightweight "community-first" governance model, and funding options through GitHub Sponsors or Open Collective, without imposing technical direction or product strategy. Instead of lengthy bureaucratic processes, projects receive “minimum viable governance”: a foundation council, open voting, and support for legal and marketing issues, all while maintaining maximum autonomy for project maintainers.

Under the Commonhaus umbrella, you’ll find both libraries and full platforms: Hibernate, Jackson, Debezium, JBang, JReleaser, SDKMAN!, Feign, EasyMock, Objenesis, Morphia, as well as infrastructure projects like Infinispan, Kroxylicious, and the newly registered SlateDB.

On May 13, Azul and Moderne announced a joint initiative that combines runtime data from Azul Intelligence Cloud - especially the Code Inventory module, which detects unused classes and methods in production - with Moderne’s platform based on OpenRewrite. Thanks to this integration, a single Moderne recipe can now automatically flag and remove dead code across dozens of repositories, as well as prepare the refactorings needed for migrations to newer JDKs or frameworks.

The partners present this as a "from insight to action" model, where runtime telemetry is instantly translated into actionable pull requests, shortening modernization timelines and reducing the risk of manual errors. Jonathan Schneider from Moderne emphasizes that this is just the beginning of “production-aware” OpenRewrite recipes, while Scott Sellers from Azul adds that developers are now gaining time for real innovation instead of maintaining “zombie code,” praising the ability to clean up large codebases “in minutes, not months.”
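Conceptually, the “insight” half boils down to a set difference between what a codebase declares and what production actually executed. A minimal sketch, assuming a hypothetical MethodRef record rather than any real Azul or Moderne data format:

```kotlin
// Hypothetical shape of one entry from a code inventory.
data class MethodRef(val className: String, val method: String)

// Declared but never observed at runtime => candidate for removal.
fun unusedMethods(declared: Set<MethodRef>, observedInProduction: Set<MethodRef>): List<MethodRef> =
    (declared - observedInProduction)
        .sortedWith(compareBy({ it.className }, { it.method }))

// Group candidates per class so each class can become one reviewable change.
fun removalPlan(candidates: List<MethodRef>): Map<String, List<String>> =
    candidates.groupBy({ it.className }, { it.method })
```

A method that was never observed is only a candidate: code that runs once a quarter or only on failure paths will look dead in a short observation window, which is why the output is better suited to reviewable pull requests than to silent deletion.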

And that’s not the only Azul partnership this month. Together with JetBrains, they announced a technical collaboration aimed at accelerating Kotlin applications from the compiler level down to the virtual machine. Azul engineers are investigating how the bytecode generated by the Kotlin compiler interacts with advanced optimizations in Falcon JIT and the pauseless C4 garbage collector. In initial tests (benchmarks alert!), migrating the same application from OpenJDK 21 to Zing reduced average latencies by around 24–28% and boosted throughput by 30–39%, demonstrating that coroutine-heavy services can genuinely benefit from more aggressive JVM optimizations.

The next stage will involve expanding the benchmark suite and jointly exploring changes to the Kotlin bytecode generator that can take even better advantage of existing and future JIT/GC mechanisms - benefiting both commercial Zing users and those on standard OpenJDK builds.

JetBrains has released its foundational Mellum-4B model on Hugging Face under the Apache 2.0 license.

In the accompanying post, Anton Semenkin and Michelle Frost described Mellum as a “focal model” - it doesn’t aim for encyclopedic knowledge, but instead focuses on a single task: code autocompletion in JetBrains IDEs. Open-sourcing the model is meant to show what a specialist model looks like when trained from scratch (not just fine-tuned). The model itself has 4 billion parameters, uses the LLaMA architecture, has a context window of 8,192 tokens, and was trained on approximately 4 trillion tokens from sources like The Stack v1/v2, CommitPack, and Wikipedia.

Open-sourcing Mellum is just the first step in a larger plan: Mellum is set to become a family of models tailored to various developer tasks—from diff prediction to documentation generation. JetBrains hopes to build an ecosystem of tools in which lightweight, domain-specific LLMs replace the monolithic, “does-everything” models.

And staying on the topic of AI: Quarkus MCP Server gained support for Streamable HTTP, a new transport defined in the Model Context Protocol (MCP) specification, making it the first Java SDK to offer this feature. In practice, starting from version 1.2 (equivalent to the Quarkiverse 1.0.0.CR4 artifacts), any MCP server built on Quarkus can communicate not only over stdio or Server-Sent Events (SSE), but also through a single HTTP endpoint that can answer with plain JSON or stream its responses. This opens the door to integrations with mobile applications and cloud services, where a dedicated, long-lived SSE connection is often blocked or inconvenient.

The streamable HTTP transport in MCP focuses on low-overhead, bidirectional data streaming, which is well-suited for real-time scenarios—from chat assistants to developer tool servers. Advanced features from the specification, such as stream resumption and redelivery, are planned for future releases.
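Under Streamable HTTP, a server may answer a client's POST either with a single JSON response or with an SSE stream carrying several JSON-RPC messages, and event ids are what make resumption possible. A minimal Kotlin sketch of reading such a stream; it handles only the id and data fields and is not the Quarkus implementation.

```kotlin
// One decoded SSE event: an optional id plus its (possibly multi-line) data.
data class SseEvent(val id: String?, val data: String)

fun parseSse(stream: String): List<SseEvent> {
    val events = mutableListOf<SseEvent>()
    var id: String? = null
    val data = mutableListOf<String>()
    for (line in stream.lines()) {
        when {
            line.isEmpty() -> { // a blank line terminates the current event
                if (data.isNotEmpty()) events += SseEvent(id, data.joinToString("\n"))
                id = null
                data.clear()
            }
            line.startsWith("id:") -> id = line.removePrefix("id:").removePrefix(" ")
            line.startsWith("data:") -> data += line.removePrefix("data:").removePrefix(" ")
            // other fields (event:, retry:, comments) are ignored in this sketch
        }
    }
    if (data.isNotEmpty()) events += SseEvent(id, data.joinToString("\n"))
    return events
}

// The last id seen is what a reconnecting client would send back in the
// Last-Event-ID header to ask the server to resume the stream.
fun resumeFrom(events: List<SseEvent>): String? = events.lastOrNull { it.id != null }?.id
```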

Oracle has released the genai-langgraph-graalpy repository as a reference microdeployment showing how to “embed” the Python agent framework LangGraph into a JVM-based microservice.

The whole solution is built on Helidon MP: REST requests go to Java code, but the actual orchestration of multi-actor LLM flows is handled by a Python script running in the same virtual machine, thanks to GraalPy, the Python implementation on GraalVM. This architecture allows you to combine the best of both worlds - the performance and operational ecosystem of Java with Python’s rich AI libraries - without the need for network communication between processes.

The key mechanism is GraalVM’s polyglot API, which passes Java records to the Python environment and back, without copying. As a result, you can call OCI models, run utility functions, or store results in a database, all within a single JVM process.

The repo includes a ready-to-use pom.xml, a Dockerfile for the JVM image (as well as a native-image variant), a sample agent integrated with Oracle Cloud Infrastructure Generative AI, and documentation covering all steps: compiling Java, installing Python dependencies, building the image, and running it locally or on Kubernetes.

On May 20, Jason Konicki from the Project Reactor team announced that they are ending development of Reactor Kafka. They justified the decision by pointing to declining adoption and a desire to focus their efforts on parts of the ecosystem that remain critical to the community, such as Reactor Core and Reactor Netty.

In practical terms, this means the library will disappear from future versions of the Reactor BOM, and version 1.3 will be the last functional release; only critical fixes will be provided until the end of the support cycle. At the same time, the Spring Cloud Stream Reactor Kafka Binder and reactive templates in Spring for Apache Kafka have been marked as deprecated and scheduled for removal.

The maintainers emphasize that discontinuing Reactor Kafka does not affect long-term support for reactive programming in Spring. However, users should plan for migration - for example, switching to the classic Apache Kafka client with Flux/Mono in the application, or to Spring Kafka in imperative mode - before the library is removed from maintained artifacts.

Eh... when you’ve been running behind all month (like I was in May), it turns out the “Missed in May” section gets surprisingly long…

But let’s get to the releases—there’s no shortage of interesting announcements here, either. We will start with Spring!

2. Release Radar

Spring Boot 3.5.0

Spring Boot 3.5.0, announced at Spring I/O last week, keeps the baseline of the whole 3.x line (Java 17 and Jakarta EE 10), so upgrading does not raise the minimum required versions of Java or Jakarta EE.

The most visible new features include the ability to load entire property bundles from a single environment variable (spring.config.import=env:MY_CONF), new annotations (@ServletRegistration and @FilterRegistration) replacing the need to write *RegistrationBean classes by hand, and enhanced support for structured logging: you can now limit or format stack traces, and ECS-format JSON logs now use a nested structure that’s better accepted by typical log backends.
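The idea behind spring.config.import=env:MY_CONF is that one environment variable carries a whole block of properties. A rough Kotlin model of that parsing step, using java.util.Properties with an injectable environment map so it can be tested; it is an assumption-level sketch, not Spring Boot's code.

```kotlin
import java.io.StringReader
import java.util.Properties

// Reads a whole properties block from a single environment variable.
// `env` defaults to the real process environment but can be swapped in tests.
fun propertiesFromEnv(name: String, env: Map<String, String> = System.getenv()): Map<String, String> {
    val raw = env[name] ?: return emptyMap() // variable not set: nothing to import
    val props = Properties().apply { load(StringReader(raw)) }
    return props.stringPropertyNames().associateWith { props.getProperty(it) }
}
```

With MY_CONF set to two lines such as spring.application.name=demo and server.port=8081, both keys become available from one variable, which is handy on platforms where every extra variable is a separate deployment setting.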

Under the hood, there are several improvements for code and configuration. If a custom Executor exists in the context, you can simply set spring.task.execution.mode=force to switch the entire @Async mechanism to use the auto-configured AsyncTaskExecutor. Automatic background initialization (bootstrapExecutor) also turns on with no extra step as long as you have the default applicationTaskExecutor. For the scheduler, you can now globally attach a TaskDecorator, and HTTP clients get new properties for setting timeouts and redirects, plus a new ClientHttpConnectorBuilder interface for more advanced customization.
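A typical reason to attach a TaskDecorator globally is to carry thread-bound context (a request id, security or tracing data) from the submitting thread to the worker thread. The sketch below declares a local Decorator interface with the same Runnable-to-Runnable shape as Spring's TaskDecorator so that it runs without Spring; requestId and runDecorated are hypothetical helpers.

```kotlin
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit

// Same shape as Spring's TaskDecorator, declared locally for a dependency-free sketch.
fun interface Decorator {
    fun decorate(task: Runnable): Runnable
}

// Hypothetical thread-bound context.
val requestId = ThreadLocal<String?>()

// Captures the caller's value at submit time and restores it on the worker thread.
val propagateRequestId = Decorator { task ->
    val captured = requestId.get()
    Runnable {
        val previous = requestId.get()
        requestId.set(captured)
        try {
            task.run()
        } finally {
            requestId.set(previous) // do not leak context into pooled threads
        }
    }
}

// Runs one decorated task on a separate thread and waits for it.
fun runDecorated(decorator: Decorator, task: () -> Unit) {
    val executor = Executors.newSingleThreadExecutor()
    try {
        executor.submit(decorator.decorate(Runnable { task() })).get()
    } finally {
        executor.shutdown()
        executor.awaitTermination(5, TimeUnit.SECONDS)
    }
}
```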

The operational layer gains native SSL support for Cassandra, Couchbase, Elasticsearch, Kafka, MongoDB, RabbitMQ, and Redis connections (with Testcontainers and Docker Compose recognizing new labels), while Actuator now publishes certificate chain metrics and allows you to trigger a Quartz job via POST at /actuator/quartz/jobs/{group}/{job}.

For the full list of changes, see the official release notes.

However, that is not the only big Spring-related release.

Spring AI 1.0

On May 20, Spring AI 1.0 was released after two years of development and eight milestone iterations, delivering a stable API that aligns with familiar Spring patterns. The framework connects with leading LLM providers - including OpenAI, Anthropic, Azure OpenAI, Amazon Bedrock, and Google Vertex AI - and supports various model types such as chat completion, embeddings, image generation, and audio transcription.

A standout feature is its support for Retrieval-Augmented Generation (RAG). The unified Vector Store API integrates with databases like Cassandra, PostgreSQL with PGVector, MongoDB Atlas, Milvus, Pinecone, and Redis, enabling LLMs to ground their responses in enterprise data. The framework also maps model outputs directly to POJOs, offers tools/function calling capabilities, and is the first in the Spring ecosystem to implement the Model Context Protocol (MCP), providing ready-to-use Spring Boot starters for both client and server sides.
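The retrieval step behind RAG is easy to sketch: rank stored chunks by vector similarity to the question and paste the best ones into the prompt. The toy Kotlin version below uses hand-written three-dimensional vectors in place of a real embedding model and vector store, so the numbers are illustrative only; it does not use Spring AI's Vector Store API.

```kotlin
import kotlin.math.sqrt

// A stored chunk with its (here hand-written) embedding vector.
data class Doc(val text: String, val embedding: DoubleArray)

fun cosine(a: DoubleArray, b: DoubleArray): Double {
    var dot = 0.0; var na = 0.0; var nb = 0.0
    for (i in a.indices) {
        dot += a[i] * b[i]
        na += a[i] * a[i]
        nb += b[i] * b[i]
    }
    return dot / (sqrt(na) * sqrt(nb))
}

// The k documents closest to the query vector.
fun topK(query: DoubleArray, docs: List<Doc>, k: Int): List<Doc> =
    docs.sortedByDescending { cosine(query, it.embedding) }.take(k)

// Grounding: the model is told to answer from the retrieved context only.
fun groundedPrompt(question: String, context: List<Doc>): String = buildString {
    appendLine("Answer using only the context below.")
    context.forEach { appendLine("- ${it.text}") }
    append("Question: $question")
}
```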

Getting started is straightforward with Spring Initializr and the appropriate starter, and Josh Long has published a tutorial that walks through it.

The release was accompanied by a Google Cloud article, co-written by Josh and Dan Dobrin, showing how to use the project with Google's own models.

LangChain4j 1.0

After two years of tinkering with the code, the LangChain4j team has decided it’s time to say goodbye to alphas and betas: on May 14, they released version 1.0.0 for the key artifacts (core, main library, two HTTP clients, and the OpenAI module), along with a unified langchain4j-bom. Settling on a single, stable version number and publishing to Maven Central were the first steps toward declaring the API "frozen" according to semver rules - the rest of the ecosystem (like Bedrock and Vertex AI integrations) remains in “-beta” for now, but the foundation is now rock solid.

Stabilization required a thorough API cleanup: all classes and methods marked as deprecated have been removed, collections returned by the library are now non-null, and core interfaces have received their final names (ChatLanguageModel → ChatModel, Tokenizer → TokenCountEstimator). Internal helper classes are now annotated with @Internal, exception mapping in the OpenAI and Ollama modules has been refined, and Kotlin extensions have been moved into a separate module to keep the public API distinct from syntactic sugar. This means the contract is clear, and future releases shouldn’t force users to refactor their code anymore.

Interestingly, to make migration painless, the authors have provided a ready-made OpenRewrite recipe that automatically swaps old names and removes legacy leftovers, while langchain4j-bom keeps all module versions in sync. As a result, 1.0 doesn’t mean “development stops here”; rather, it marks the beginning of a predictable cycle, where new features (like MCP, support for more models or tools) will land on a stable base without the risk that your build suddenly breaks tomorrow.

Agent Development Kit for Java (ADK) 0.1.0

Staying in the realm of AI: at Google I/O 2025, Google announced the Agent Development Kit for Java (ADK) version 0.1.0, the first official release that brings full-fledged, “code-first”, Google-style agent development to Java 17+. Java developers now get the same capabilities that were previously available only to Python users.

ADK-Java allows you to define individual LLM agents and complex flows (Sequential, Parallel, Loop) directly in code, while the built-in Dev UI - launched from the command line - lets you test token streaming for text, voice, and video even before your first commit. The sample ScienceTeacherAgent is up and running in just a few lines of code and a mvn exec:java command, demonstrating live how the agent responds in a browser or via microphone.
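The three workflow shapes are essentially combinators over agents. In this hypothetical Kotlin sketch an agent is reduced to a String-to-String function; none of ADK's actual classes or builders are used.

```kotlin
import java.util.concurrent.CompletableFuture

// Hypothetical simplification: an agent turns one text into another.
typealias Agent = (String) -> String

// Sequential: each step receives the previous step's output.
fun sequential(vararg steps: Agent): Agent = { input -> steps.fold(input) { acc, step -> step(acc) } }

// Parallel: every branch gets the same input concurrently; answers are joined in order.
fun parallel(vararg branches: Agent): Agent = { input ->
    branches.map { agent -> CompletableFuture.supplyAsync { agent(input) } }
        .joinToString(" | ") { it.join() }
}

// Loop: repeat one agent until the condition holds or the budget is used up.
fun loop(maxIterations: Int, done: (String) -> Boolean, body: Agent): Agent = { input ->
    var current = input
    var i = 0
    while (i < maxIterations && !done(current)) {
        current = body(current)
        i++
    }
    current
}
```

The iteration cap matters: without it, a loop whose exit condition is decided by an LLM might never terminate.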

Completed agents can later be deployed to Vertex AI Agent Engine, Cloud Run, or GKE. The framework also supports the Agent2Agent (A2A) protocol and offers built-in tools (from search to OpenAPI), event buses, and an evaluation module. Everything is open source under the Apache 2.0 license, so you can contribute to the project with the community, or simply clone the repo and start building your own multi-agent backend right away.

The first tutorials on using ADK for Java have already been published and are worth checking out; you will also find some samples there.

GitHub Copilot App Modernization for Java

Nick Zhu announced that GitHub Copilot App Modernization for Java, an extension pack for VS Code that turns the tedious “upgrade marathon” into a one-click sprint, is now in public preview. As announced at Microsoft Build, you can now install this all-in-one Extension Pack and get an AI navigator that analyzes your project, lays out a modernization plan, and immediately suggests specific fixes. Everything is powered by AppCAT (Application Compatibility Assessment Toolkit), a tool and framework developed by Microsoft for automatically analyzing application compatibility and identifying modernization needs.

The transformation engine acts like an automated surgeon: it uses ready-made “recipes” (for things like secrets, queues, identity) or patterns extracted from commit histories and, with OpenRewrite (here we go again), performs mass code refactoring, fixes compilation errors, and runs tests to make sure everything is green.

If anything still complains after the operation, Copilot collects logs and keeps fixing until your build passes smoothly. For dessert, the tool scans your freshly polished codebase for CVEs and inconsistencies, automatically patches them, and can optionally generate IaC files, set up CI/CD, or even deploy the app to Azure and run it there.

And talking about running an app on Azure, there is a new tool for that!

jaz - Azure Command Launcher for Java

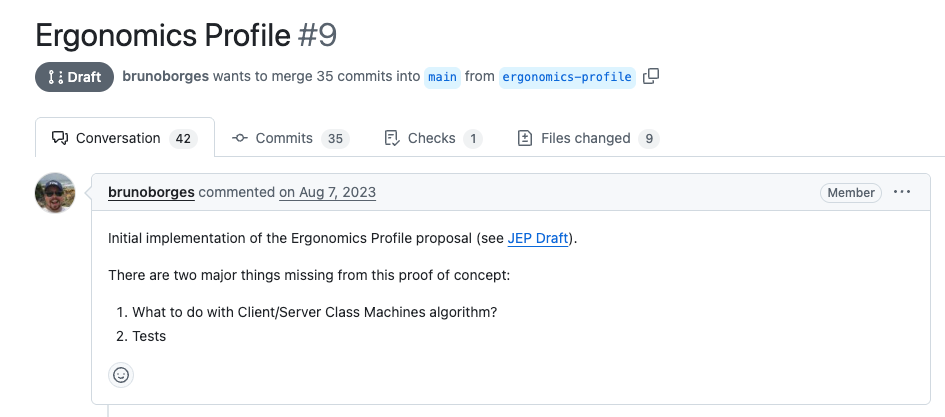

Also during Build, Microsoft and Bruno Borges unveiled Azure Command Launcher for Java, known as "jaz": a cloud-native JVM launcher that sits between your startup command and java, automatically optimizing default flags for containers and VMs running on Azure. It supports JDK 8, 11, 17, and 21, and is based on the observation that most teams rarely tweak JVM parameters, causing applications to waste memory and CPU on HotSpot’s defensive defaults.

jaz detects container limits and workload types, then serves up an optimized set of flags: it adjusts heap size, selects the right GC, enables diagnostics, and uses AppCDS - with future plans to integrate with Project Leyden. As a result, you can replace long JAVA_OPTS like -XX:… with a simple jaz -jar app.jar and immediately reduce memory usage and speed up startup, without diving into JVM tuning guides.
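The “defensive defaults” are concrete: except for very small memory sizes, HotSpot caps the heap at 25% of available memory (MaxRAMPercentage=25) and only picks G1 on a “server-class” machine with at least 2 CPUs and roughly 2 GB (checked as 1792 MB) of memory, falling back to Serial GC otherwise. The Kotlin model below captures just those two rules; it is not jaz's logic, and the 75% figure in handTunedFlags is an illustrative assumption, not a recommendation from the jaz team.

```kotlin
// Result of the simplified ergonomics model.
data class JvmDefaults(val maxHeapMb: Long, val gc: String)

// Two HotSpot defaults, ignoring the special case for very small memory sizes.
fun hotspotDefaults(cpus: Int, memoryMb: Long): JvmDefaults {
    val serverClass = cpus >= 2 && memoryMb >= 1792
    return JvmDefaults(
        maxHeapMb = memoryMb * 25 / 100, // MaxRAMPercentage defaults to 25
        gc = if (serverClass) "G1" else "Serial",
    )
}

// Flags a team might set by hand for a container dedicated to a single JVM
// (hypothetical values, for comparison only).
fun handTunedFlags(percent: Int = 75): List<String> =
    listOf("-XX:MaxRAMPercentage=$percent.0", "-XX:+UseG1GC")
```

A 1-CPU, 1 GB container therefore gets a 256 MB heap and Serial GC by default, leaving most of the memory it pays for unused; that gap is what a launcher choosing flags per environment tries to close.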

The tool is currently in private preview; after requesting access, you receive DEB/RPM packages for Microsoft Build of OpenJDK and Eclipse Temurin images, as well as direct contact with the team, which is planning adaptive profiles based on telemetry, full AppCDS/Leyden support, and metrics export to Prometheus.

This initiative is a natural move for Microsoft, which proposed the concept of Ergonomics Profiles for the JVM years ago. From my perspective, that proposal was similar to what jaz brings, only generic and aimed at OpenJDK itself.

Vert.x 5.0

Eclipse Vert.x is a toolkit for building reactive, event-driven applications on the JVM. It’s designed for high concurrency, scalability, and low latency, with a polyglot API supporting Java, Kotlin, Groovy, and more. Vert.x reached the peak of its popularity in the mid-2010s, especially as “reactive” architectures gained traction in the Java world. It was frequently pitched as a lightweight, callback-driven alternative to heavy Java EE stacks, particularly after joining the Eclipse Foundation in 2013–2014. While it’s since become a more niche choice with the rise of frameworks like Spring WebFlux and Quarkus, Vert.x remains influential in the world of non-blocking, asynchronous Java programming.

The callback era, however, is finally over. Vert.x 5, released on May 15, decisively cuts the cord with its old callback-driven model: the entire API now revolves around Future constructs, and the new core class VerticleBase (plus the functional Deployable interface) lets you manage startup and shutdown logic in a single lambda. The project has also gained full-fledged JPMS support, so Vert.x libraries are now explicit Java modules, making it easier to build slim, modular apps on Java 21+.
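The difference between the two styles is easiest to see side by side. This Kotlin sketch uses the JDK's CompletableFuture as a stand-in for Vert.x's own Future type (which offers comparable compose and map operations); findUser, loadOrders and their callback twins are hypothetical.

```kotlin
import java.util.concurrent.CompletableFuture

// Callback style: every step nests inside the previous one.
fun findUserCb(id: Int, callback: (String) -> Unit) = callback("user-$id")
fun loadOrdersCb(user: String, callback: (List<String>) -> Unit) =
    callback(listOf("$user:order1", "$user:order2"))

fun orderCountCallbacks(id: Int, done: (Int) -> Unit) =
    findUserCb(id) { user -> loadOrdersCb(user) { orders -> done(orders.size) } }

// Future style: the same flow becomes a flat, composable pipeline.
fun findUser(id: Int): CompletableFuture<String> = CompletableFuture.completedFuture("user-$id")
fun loadOrders(user: String): CompletableFuture<List<String>> =
    CompletableFuture.completedFuture(listOf("$user:order1", "$user:order2"))

fun orderCountFutures(id: Int): CompletableFuture<Int> =
    findUser(id)
        .thenCompose { loadOrders(it) } // chain the next async step
        .thenApply { it.size }          // transform the result
```

With futures, error handling and further steps attach to one value instead of being threaded through each nested callback, which is the ergonomic gain behind the Vert.x 5 redesign.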

The biggest functional leap is in communication and I/O. The overhauled gRPC implementation now supports gRPC-Web, REST transcoding, JSON message formats, built-in reflection, and health checks out of the box. The idiomatic code generator outputs Future-ready classes. The core has adopted native io_uring from Netty 4.2, and there’s now built-in client-side load balancing, a new Service Resolver, and an improved HTTP proxy that brings WebSocket interceptors and a pluggable cache. Release notes also highlight the new vertx-virtual-threads module, enabling use of JDK virtual threads without external dependencies.

On the tooling side, there’s a new Application Launcher - replacing the old io.vertx.core.Launcher - and Maven Plugin 2.0, which supports both Vert.x 4 and 5 for smooth, staged migrations. The team also announced several “sunsets”: Netty-based gRPC, the JDBC API, Service Discovery, OpenTracing, and Vert.x Unit are being deprecated and will be removed in the next major version, while Vert.x Sync is already gone (with blocking functionality now handled by virtual threads).

In short, Vert.x 5 means a sleeker API, faster I/O, and a toolchain designed to move existing projects forward without turbulence.

Hibernate 7.0

And sticking with frameworks whose glory days (or “fan favorite” status) are behind them, it’s time for Hibernate 7.0.

Hibernate ORM 7.0.0 marks a new chapter - but mostly on the formal side: the project is now licensed under Apache 2.0, and Java 17 is the new minimum requirement. At the same time, the engine fully implements Jakarta Persistence 3.2, so applications need to use the jakarta.* namespace and can easily run tests on Java 21 as well.

In day-to-day development, you’ll immediately notice a few long-awaited mapping improvements. @SoftDelete now has a TIMESTAMP mode, so you can record the exact moment an entity was “soft-deleted.” The new @EmbeddedColumnNaming annotation lets you automatically assign consistent prefixes to embedded field columns. Plus, @NamedEntityGraph allows you to define complex fetch graphs directly in annotations - no more XML or separate methods.
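What the TIMESTAMP mode records is easy to model: instead of removing the row, a delete stores the moment of deletion, and regular reads filter those rows out. Hibernate generates this in SQL from the @SoftDelete mapping; the in-memory repository below is a hypothetical Kotlin model of the behavior, not Hibernate code.

```kotlin
import java.time.Instant

// A row that is "deleted" once deletedAt is set.
data class Row(val id: Long, val title: String, val deletedAt: Instant? = null)

class SoftDeleteRepository {
    private val rows = mutableMapOf<Long, Row>()

    fun save(row: Row) {
        rows[row.id] = row
    }

    // Soft delete: keep the row, record when it was deleted.
    fun delete(id: Long, now: Instant = Instant.now()) {
        rows[id]?.let { rows[id] = it.copy(deletedAt = now) }
    }

    // Normal reads only see live rows.
    fun findAll(): List<Row> = rows.values.filter { it.deletedAt == null }

    // The timestamp enables questions a boolean flag cannot answer.
    fun deletedSince(moment: Instant): List<Row> =
        rows.values.filter { it.deletedAt?.let { at -> !at.isBefore(moment) } == true }
}
```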

On the querying and performance side, there’s a new (incubating) QuerySpecification/Restriction/Range API, a set of strongly-typed Find/Lock/RefreshOption objects instead of string “hints,” and handy findMultiple() and getMultiple() methods for fetching entity batches in JDBC-sized chunks. HQL now supports the full suite of JSON and XML functions aligned with the SQL standard, and applications can peek into the first-level cache via Session.getManagedEntities(), which makes diagnostics and context cleanup a lot easier.

So yeah, you are right, most of the new features are annotations 😅. That explains a lot about the current state of Hibernate's fan base. Still better than XML.

Gradle 8.14

Gradle 8.14, released on April 25 (so technically an April release, but I missed it last time), raises the compatibility bar: both the daemon and the entire toolchain infrastructure now support Java 24 right out of the box. A new toolchain option can also filter and select only those JDKs that provide GraalVM Native Image. Test reports are now clearer too: when a scenario is skipped due to the Assumptions API, the reason is included in both the HTML report and JUnit XML files.
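In a Kotlin DSL build script, selecting a Native Image capable JDK looks roughly like this. Treat it as a sketch and double-check the property name against the Gradle 8.14 toolchain documentation; the Java version is just an example value.

```kotlin
// build.gradle.kts (Gradle 8.14+): only accept toolchains that ship GraalVM Native Image.
java {
    toolchain {
        languageVersion = JavaLanguageVersion.of(21) // example version
        nativeImageCapable = true
    }
}
```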

On the performance front, dependency configuration is now initialized lazily, which shortens the configuration phase and reduces memory usage. The enhanced Problems API lets you attach arbitrarily complex data to problem reports, so IDEs can display the full diagnostic context. For those using the configuration cache, there’s a new Integrity Check mode - stricter validation with detailed messages to help debug tricky serialization errors.

Amper Update – May 2025

Last but not least, Amper 0.7.0, released at the end of May, shifts the focus to its standalone build engine: the Gradle-based variant now lands in the “deprecated” section, while the standalone engine gains full support for Ktor and Spring. By simply setting ktor: enabled or springBoot: enabled in the settings section, Amper automatically adds the no-arg/all-open plugins, sets up BOMs, and can build a runnable JAR, so new server projects are ready to go without manual scripting.

The latest release also makes another leap in multiplatform support: joining Android and Desktop, iOS is now supported (with static frameworks and “run” on the simulator), and the default Compose Multiplatform version is bumped to 1.8.0. Everything can now be run and packaged using only Amper commands - no extra tools required.

On the ergonomics front, diagnostics are clearer - warnings about overridden dependency versions, precise errors when a library doesn’t support all platforms, and a new “Add dependency” quick-fix when an unresolved symbol comes from another module. The engine now detects cycles in the task and module graphs, can add telemetry for dependency resolution, and on internal errors, you get a concise message with guidance, instead of a stack trace.

Scala 3.7

Scala 3.7.0 (released May 7, 2025) brings two long-awaited features to general availability. As Wojciech Mazur from VirtusLab writes in his post, named tuples ((x = 1, y = 2)) are now out of experimental status, so you can return them from methods, filter fields by name, and build lightweight structural types without needing macros. The new @publicInBinary annotation addresses a common pain point for library authors: inlined methods can now reference protected fields without worrying that helper accessors will disappear during subsequent compilations, preserving binary compatibility.

A conceptual innovation in this release is “preview features” - fully implemented SIPs that might still undergo minor tweaks. Enabled globally with the -preview flag, these features currently include “Better Fors” (you can start a for comprehension with an assignment), while in experimental mode, you’ll find things like @unroll (adding default parameters without breaking the ABI) and the ability to treat package objects as ordinary values. In other words: the language opens new doors for the curious, but with a clear warning for production library maintainers. A bit like Java does.

On the tools and platform side, Scala 3.7 changes the priority of given instance selection, absorbs the “expression compiler” (used by Metals and IntelliJ), unlocks compilation for Android, improves the precision of -Wunused warnings, and lets you pull in dependencies via :jar in the REPL. Rounding things out, JS/CLI dependencies have been updated to the latest versions. All told, this release offers just as many new toys as it does migration tools, serving as a convenient stopping point before the next major language update planned for 3.8.

But we are not there yet! There are still some all-stars I wanted to share with you 😀

3. GitHub (but not only) All-Stars

Embabel

Embabel is a brand-new open-source project from Rod Johnson (the creator of Spring!) that aims to bring an agent-based approach to working with LLMs straight into the JVM world.

Embabel's approach is an interesting one. At the core of Embabel is the planning phase: the framework automatically discovers the goals and available actions in your code, then, using a deterministic, explainable algorithm (not just another LLM), lays out a plan to fulfill the request. This approach lets you mix and match language models, inject guardrails, break tasks down into fault-tolerant and scalable sub-agents, and easily combine them into "federations." The application domain is represented as typed records or data classes, so your prompts remain refactoring-safe, and you can expose any method as both a tool for the model and a standard Spring component.

Here you will find a simplified example from the project repo:

```kotlin
// Simplified example; imports for @Agent, @Action, @AchievesGoal, PromptRunner
// and the domain types are omitted in this excerpt.
@Agent(description = "Find news based on a person's star sign")
class StarNewsFinder(
    // Assumed to be injected (e.g. as a Spring bean); not shown in the excerpt.
    private val horoscopeService: HoroscopeService,
) {

    @Action
    fun extractPerson(input: String): StarPerson =
        PromptRunner().createObject("Get the person's name and star sign from: $input")

    @Action
    fun getHoroscope(person: StarPerson): Horoscope =
        Horoscope(horoscopeService.dailyHoroscope(person.sign))

    @Action(toolGroups = [ToolGroup.WEB])
    fun findNews(person: StarPerson, horoscope: Horoscope): RelevantNewsStories =
        PromptRunner().createObject(
            """
            ${person.name} is a ${person.sign}.
            Today's horoscope: ${horoscope.summary}
            Find 3 news stories that connect to this reading, but are not about astrology.
            Give a summary and a URL for each.
            """.trimIndent()
        )

    @AchievesGoal("Write a fun summary for the person based on their horoscope and news.")
    @Action
    fun writeup(
        person: StarPerson,
        news: RelevantNewsStories,
        horoscope: Horoscope,
    ): Writeup =
        PromptRunner().withTemperature(1.2).createObject(
            """
            Write something fun for ${person.name} (${person.sign}).
            Start with their horoscope: ${horoscope.summary}
            Then mention these news stories:
            ${news.items.joinToString("\n") { "- [${it.summary}](${it.url})" }}
            Finish with a funny signoff.
            Format as Markdown.
            """.trimIndent()
        )
}
```

Rod says Embabel doesn’t just want to catch up with Python’s agent frameworks: it aims to leap ahead, offering JVM developers full transparency, extensibility, and seamless integration with the Spring ecosystem. The project is still in its early days, but it’s already open for contributions and promises that “agents” will become the new “beans” - except now they’ll talk to language models, not just databases.

Brokk

Brokk is a new, fully open-source coding platform built by Jonathan Ellis (co-creator of Apache Cassandra), aiming to bring AI to bear on truly massive codebases. The idea was born out of frustration: while traditional LLM tools do fine on small projects, they get lost in millions of lines of code.

Brokk’s answer is “compiler-grade context” - advanced static analysis that understands types and dependencies, feeding only the truly relevant slices of code to the models, so they stay effective even across sprawling codebases.

The key concept: code isn’t just text. Brokk uses a deep analysis engine (powered by Joern) to semantically navigate program structure and build precise context for AI agents - from symbol search and deep scans to linking related files. In practice, it works like an AI-native IDE: the user plans and supervises, an “Architect” agent breaks down tasks and passes them to a “Code” agent, which then generates or modifies files. Everything is observable and reversible, so the human stays in control while the model focuses on generating and refactoring code. Brokk is JVM-based, since the libraries needed for full type inference work best in that ecosystem, meaning Java and Kotlin users can plug it in without switching to Python.
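The "only the relevant slices" idea can be illustrated with a toy version: walk a dependency graph outward from the symbol you are working on and stop after a few hops. This is a hypothetical Java sketch, not how Brokk or Joern actually work (their analysis is type-aware and far richer); `slice`, the graph, and all symbol names are made up for illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ContextSlicer {
    // Breadth-first walk over a symbol dependency graph: returns the target plus every
    // symbol reachable within `maxHops` edges, nearest first. Everything else stays out of the prompt.
    static List<String> slice(Map<String, List<String>> deps, String target, int maxHops) {
        Map<String, Integer> depth = new LinkedHashMap<>(); // insertion order == BFS discovery order
        Deque<String> queue = new ArrayDeque<>();
        depth.put(target, 0);
        queue.add(target);
        while (!queue.isEmpty()) {
            String symbol = queue.poll();
            int d = depth.get(symbol);
            if (d == maxHops) continue; // do not expand beyond the hop limit
            for (String next : deps.getOrDefault(symbol, List.of())) {
                if (!depth.containsKey(next)) { // also protects against cycles
                    depth.put(next, d + 1);
                    queue.add(next);
                }
            }
        }
        return new ArrayList<>(depth.keySet());
    }

    public static void main(String[] args) {
        // Hypothetical call graph of a small codebase.
        Map<String, List<String>> deps = Map.of(
            "OrderService.placeOrder", List.of("OrderRepository.save", "PricingService.quote"),
            "PricingService.quote", List.of("TaxTable.rateFor"),
            "TaxTable.rateFor", List.of("CountryCodes.normalize"),
            "Logging.debug", List.of());
        // Prints [OrderService.placeOrder, OrderRepository.save, PricingService.quote, TaxTable.rateFor]
        System.out.println(slice(deps, "OrderService.placeOrder", 2));
    }
}
```

Unrelated code (`Logging.debug`) and anything beyond the hop limit (`CountryCodes.normalize`) never reach the model, which is the whole point of curating context instead of pasting files wholesale.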

Also, be aware that Brokk is a paid solution - you have been warned. That said, their pricing model seems quite fair; I especially like their fair-use billing.

I promise I'm already planning an issue about MCP and migration tools for the JVM - there just seems to be a continuous influx of them...

RecordBuilder

Let's take a break from AI.

RecordBuilder is a lightweight annotation processor that fills a key gap left by Java records: it generates a builder class, a set of “with” methods for convenient copying, and, if needed, even creates the record itself from an interface. Everything happens at compile-time, with no reflection or runtime dependencies, so your code stays lean and records remain fully immutable, while you get back some of the readability you’d expect from Lombok or Immutables. All you need is a single @RecordBuilder annotation:

```java
import io.soabase.recordbuilder.core.RecordBuilder;

@RecordBuilder
public record NameAndAge(String name, int age) {}
```

This generates a builder class that can be used like this:

```java
// build from components
NameAndAge n1 = NameAndAgeBuilder.builder().name(aName).age(anAge).build();

// generate a copy with a changed value
NameAndAge n2 = NameAndAgeBuilder.builder(n1).age(newAge).build(); // name is the same as the name in n1

// pass to other methods to set components
var builder = new NameAndAgeBuilder();
setName(builder);
setAge(builder);
NameAndAge n3 = builder.build();
```

This lets you gradually migrate your DTOs to records without giving up the builder pattern’s convenience, gaining native withers and avoiding the boilerplate of manual copy methods or canonical constructors for objects with many fields.
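If you are curious what the processor saves you from, here is a rough hand-written equivalent: a fluent builder plus a single "wither". It is only an approximation in plain Java with no dependencies; the code RecordBuilder actually generates differs in the details, and `withAge` and `BuilderSketch` are illustrative names, not part of the library.

```java
public class BuilderSketch {
    record NameAndAge(String name, int age) {
        // Hand-written "wither": returns a copy with one component changed.
        NameAndAge withAge(int newAge) {
            return new NameAndAge(name, newAge);
        }
    }

    // Hand-written builder, approximating what @RecordBuilder generates for you.
    static final class NameAndAgeBuilder {
        private String name;
        private int age;

        static NameAndAgeBuilder builder() {
            return new NameAndAgeBuilder();
        }

        // Pre-populates the builder from an existing record, enabling "copy and change".
        static NameAndAgeBuilder builder(NameAndAge from) {
            return new NameAndAgeBuilder().name(from.name()).age(from.age());
        }

        NameAndAgeBuilder name(String name) { this.name = name; return this; }
        NameAndAgeBuilder age(int age) { this.age = age; return this; }

        NameAndAge build() {
            return new NameAndAge(name, age); // delegates to the canonical constructor
        }
    }

    public static void main(String[] args) {
        NameAndAge n1 = NameAndAgeBuilder.builder().name("Duke").age(30).build();
        NameAndAge n2 = NameAndAgeBuilder.builder(n1).age(31).build();
        NameAndAge n3 = n1.withAge(32);
        System.out.println(n1 + " " + n2 + " " + n3);
    }
}
```

Multiply this by every record with a dozen components and it becomes clear why generating it at compile time is attractive.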

I like it - it seems like a genuinely useful little tool.

Workflows4s

And finally, since we had a new Scala release, let's wrap up with a Scala library!

Workflows4s is a new Scala library by Wojciech Pituła that lets you model business workflows as regular Scala code: every step is described with compile-time-checked expressions, and the whole process becomes a function you can run, test, and refactor just like any other part of your app. It was born after three years of experimenting with Camunda, Temporal, and custom event engines, all to make it easier to design long-running, event-driven, and stateful operations - without offloading them to an external orchestrator.

```scala
object Context extends WorkflowContext {
  override type State = String
}

import Context.*

// define the workflow
val hello = WIO.pure("Hello").autoNamed
val world = WIO.pure.makeFrom[String].value(_ + " World!").autoNamed
val workf = hello >>> world

// render workflow definition
println(MermaidRenderer.renderWorkflow(workf.toProgress).toViewUrl)

// run the workflow
val runtime = InMemorySyncRuntime.default[Context.Ctx](workf, initialState = "")
val wfInstance = runtime.createInstance(())
wfInstance.wakeup()
println(wfInstance.queryState())
// Hello World!

// render instance progress
println(MermaidRenderer.renderWorkflow(wfInstance.getProgress).toViewUrl)
```

The core is event sourcing (your system design interviewer already likes it): every non-deterministic action records an event, so you can replay, analyze, or restore workflows after a failure, with full visibility into their history. The library is “serverless” - all it needs is a database. You can execute workflows in-memory, with Pekko (the free, Apache-licensed fork of Akka), or on Postgres, with no need to set up a dedicated server.
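The replay idea behind event sourcing fits in a few lines. The Java sketch below is a generic illustration of the technique, not Workflows4s's API: `Journal`, `Runner`, `step`, and `workflow` are hypothetical names. Each non-deterministic step records its result on the first run; a recovered run reads results back from the journal instead of executing them again, so it reaches exactly the same state.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;
import java.util.function.Supplier;

public class ReplaySketch {
    // Append-only event log; a real engine would persist this in a database.
    static final class Journal {
        final List<String> events = new ArrayList<>();
    }

    // Executes workflow steps, recording or replaying their results.
    static final class Runner {
        private final Journal journal;
        private int position = 0;
        int executions = 0; // how many non-deterministic actions actually ran

        Runner(Journal journal) { this.journal = journal; }

        String step(Supplier<String> nonDeterministic) {
            String result;
            if (position < journal.events.size()) {
                result = journal.events.get(position); // replay the recorded event
            } else {
                result = nonDeterministic.get();        // execute for real
                executions++;
                journal.events.add(result);             // record the outcome as an event
            }
            position++;
            return result;
        }
    }

    // A tiny workflow with two non-deterministic steps.
    static String workflow(Runner runner) {
        String orderId = runner.step(() -> UUID.randomUUID().toString());
        String stamp = runner.step(() -> Long.toString(System.nanoTime()));
        return orderId + " @ " + stamp;
    }

    public static void main(String[] args) {
        Journal journal = new Journal();
        Runner firstRun = new Runner(journal);
        String original = workflow(firstRun);
        Runner recovered = new Runner(journal); // e.g. after a crash, same journal
        String replayed = workflow(recovered);
        System.out.println(original.equals(replayed));
        System.out.println(firstRun.executions + " " + recovered.executions);
    }
}
```

Running `main` prints `true` followed by `2 0`: the first run executed both non-deterministic steps, and the recovered run replayed them without executing anything. A production engine would persist the journal and key events by step identity rather than by position, so that workflow code can evolve safely.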

May was kinda... crazy. In a positive way, as it was packed with fascinating announcements. I hope June brings just as many great releases before the holiday break.

And meanwhile - see you next week!
