Maven Central introduces Rate Limits to prevent Tragedy of the Commons - JVM Weekly vol. 90

This time, game theory in practice, Truffle, and our classic Release Radar - it's been a while.

1. Maven Central implements Bandwidth Limits to curb resource misuse by heavy users

Once again, it turns out we simply can't have nice things.

Maven Central is a super important place for the Java community - I probably don't need to convince anyone of that. After all, since the closure of JCenter by Bintray, it's the central repository where you can find most of the libraries and tools for the JVM. Despite some competition (note that mvnrepository.com is not competition, just a site indexing artifacts from various repositories, including Maven Central), it has become the default place where every new library ends up. Last year, Maven Central boasted over 1 trillion downloads, showing just how popular and important it is.

At the same time, Maven Central is funded by Sonatype, a company specializing in open source component management. Sonatype manages the infrastructure, verification, and publication of artifacts, ensuring the stability, security, and availability of the repository. They do this to support the global developer community while strengthening their position as a leader in this field.

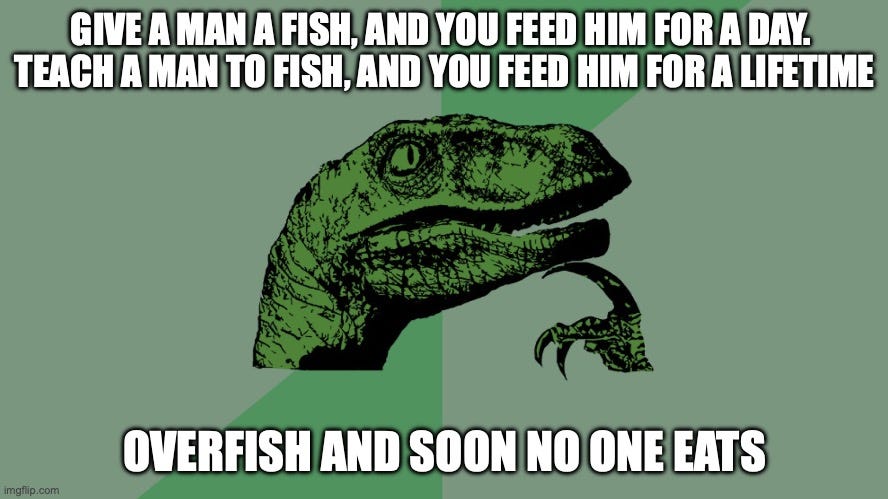

However, Maven Central encountered a significant problem related to resource consumption, as Sonatype writes in their post Maven Central and The Tragedy of the Commons. The company's analysis showed that 83% of the total bandwidth is consumed by only 1% of IP addresses, mainly from the largest companies in the world. This disproportionate use illustrates the so-called tragedy of the commons - known to all game theory enthusiasts - where a few users excessively exploit a shared resource, leading to its potential depletion and affecting the entire community. Common use of Maven Central without proper caching practices further exacerbates this problem, increasing the load on its infrastructure and highlighting the need for a sustainable solution.

Therefore, Maven Central decided to implement restrictions for the heaviest users. This means that those who download the most will have their download speeds slowed down to reduce their impact on the repository. Additionally, organizations are encouraged to use corporate repository managers, such as Sonatype's own Nexus Repository, which can significantly reduce the load on shared infrastructure by storing frequently used artifacts locally. With these steps, Maven Central aims to ensure the long-term sustainability of its resources, minimizing disruptions for the community.

I'll note right away that this is a somewhat exaggerated comparison, but the whole situation reminds me of the abuse of free tiers of certain services (like GitLab) for cryptocurrency mining. In both cases, resources that specific companies provided to the community in good faith (even if the goal was also to promote those companies' services) were exploited, which forced some form of throttling (the one for Maven Central is still much lighter).

Organizations that suspect they are subject to these restrictions (read: their builds suddenly slowed down) can contact Maven Central for further assistance and alternative solutions, so if I have any free riders among my readers - you know what to do.

2. How Truffle outshines V8 Sandbox in security and performance (at least according to Truffle)

Because I have probably written as much JavaScript commercially as I have Java, combining these worlds with Truffle is one of my favorite topics in the entire JVM, and the one I took to meetups and conferences earlier this year. However, I rarely get the chance to write about Truffle because it's one of those rarely discussed projects and, on top of that, highly misunderstood in the community. Therefore, I am very pleased with publications like Writing Truly Memory Safe JIT Compilers by Mike Hearn, republished by the official GraalVM Blog, which place Truffle in a broader context. But before we get into that, let's first discuss this context a bit. We need to talk about V8.

V8 is an open-source JavaScript engine developed by Google, used in the Chrome browser and many other applications like Node.js. Its main goal is to execute JavaScript code quickly through Just-In-Time compilation, which significantly increases the performance of web applications. Last month, the V8 team introduced the V8 Sandbox, a security enclave designed to mitigate browser exploits arising from errors in the JIT compiler. These vulnerabilities often lead to memory safety issues, which are a major source of Chrome exploits. This is because V8 is written in C++, making it susceptible to such bugs, but the complexity of these vulnerabilities means they are not just classic memory corruption issues like use-after-free errors. Instead, they result from subtle logic bugs that can corrupt memory, rendering existing memory safety solutions largely ineffective.

Here enters the GraalVM JavaScript engine, GraalJS, written in Java using the aforementioned Truffle framework. Despite initial concerns that it might suffer from the same class of bugs as V8, according to the author of the article, GraalJS demonstrates increased memory safety. This is achieved thanks to a completely different architecture, which, when explained, will help you better understand Truffle. Instead of relying on separate implementations for the interpreter and the JIT compiler, Truffle allows developers to write the interpreter in Java. This interpreter is memory-safe because it uses Java's Garbage Collection and Memory Barriers mechanisms. When hot-spots in the code are identified, the same Graal compiler that transforms the interpreter into native code is used to compile user methods, ensuring consistent and safe execution.

Truffle achieves this through partial evaluation, which transforms interpreter code into compiled JIT user methods while maintaining memory safety. By using final variables for compilation and optimizing constant folding, Truffle ensures that the generated machine code matches the behavior of the interpreter and remains memory-safe. This approach not only simplifies the development of language runtime environments but also eliminates a significant class of subtle memory safety-related bugs. The result is (if we believe the GraalVM team) a more secure JavaScript engine that can seamlessly handle JIT compilation without introducing vulnerabilities typical of traditional virtual machines like V8.
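To make the idea a bit more tangible, here is a minimal sketch of the interpreter style that partial evaluation works on. It is plain Java and deliberately does not use the Truffle API: every name in it (`ToyInterpreter`, `Node`, `Const`, `Arg`, `Add`, `Mul`, `specialized`) is hypothetical, and the `specialized` method is written by hand only to illustrate what a partial evaluator could conceptually derive once the tree's `final` fields are treated as constants.

```java
// A toy AST interpreter in plain Java. This is NOT the Truffle API;
// all class and method names are made up for illustration.
public class ToyInterpreter {

    // Every node of the guest program's syntax tree knows how to execute itself.
    interface Node {
        int execute(int[] args);
    }

    static final class Const implements Node {
        private final int value; // final: can be treated as a constant once the tree is fixed

        Const(int value) { this.value = value; }

        public int execute(int[] args) { return value; }
    }

    static final class Arg implements Node {
        private final int index;

        Arg(int index) { this.index = index; }

        // Array access is bounds-checked by the Java runtime.
        public int execute(int[] args) { return args[index]; }
    }

    static final class Add implements Node {
        private final Node left;
        private final Node right;

        Add(Node left, Node right) { this.left = left; this.right = right; }

        public int execute(int[] args) { return left.execute(args) + right.execute(args); }
    }

    static final class Mul implements Node {
        private final Node left;
        private final Node right;

        Mul(Node left, Node right) { this.left = left; this.right = right; }

        public int execute(int[] args) { return left.execute(args) * right.execute(args); }
    }

    // Guest program: f(x) = x * 2 + 1, expressed as a tree of nodes.
    static Node program() {
        return new Add(new Mul(new Arg(0), new Const(2)), new Const(1));
    }

    // Hand-written stand-in for the result of partial evaluation:
    // the tree walk has been folded away and only the arithmetic remains.
    static int specialized(int[] args) {
        return args[0] * 2 + 1;
    }

    public static void main(String[] args) {
        int[] input = {20};
        System.out.println(program().execute(input)); // interpreter path, prints 41
        System.out.println(specialized(input));       // "compiled" path, prints 41
    }
}
```

The point of the sketch is that there is only one description of the language semantics, the `execute` methods, so the specialized form is derived from the interpreter rather than written separately by a second team of compiler engineers.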

The article Writing Truly Memory Safe JIT Compilers was created as a kind of response to the V8 Sandbox announcement and serves as an excellent introduction for anyone wanting to better understand what Truffle is. If the above paragraphs were interesting to you, I suggest reading the entire article. Adding to this is the fact that Thomas Wuerthinger, one of the original creators of GraalVM and Truffle and the lead of the entire project, worked on V8 and played a significant role in creating the original optimizing V8 compiler called Crankshaft, which gives the whole story an additional layer of intrigue.

Despite my great fondness for Truffle, for completeness, I would very much like to read a response from the V8 team.

3. Release Radar

Jenkins to Require JDK 17

Let's start today's radar with Jenkins, which is about to make a significant leap.

Starting from the weekly release of Jenkins 2.463 on June 18th (it seems the creators are quite fond of the release train concept), Jenkins will require JDK 17 or newer for both controllers and JVM agents, as reported by Basil Crow, a long-time Jenkins user and contributor. While the LTS line 2.452.x will still require JDK 11 as the minimum, the first LTS release requiring Java 17 will appear at the end of October 2024. This change is driven by the end of support for Spring Framework 5.3.x on August 31, 2024, and the need to upgrade to Spring Security 6.x, which requires Java 17, Jetty 12, and Jakarta EE 9.

It's clear how JDK 17 is spreading virally throughout the ecosystem.

Jenkins users should ensure that both their controllers and agents are running on Java 17 or newer. Official Jenkins Docker images already include JDK 17, and before upgrading to Jenkins 2.463, it's essential to check agent compatibility using the Versions Node Monitors plugin.

Java on Visual Studio Code Update – June 2024

This week, we also have the June Visual Studio Code for Java update, published by Nick Zhu, Senior Product Manager at Microsoft. Key changes include enhancements to the project settings page with new sections for the compiler, Maven profiles, and code formatting settings. These changes provide developers with more convenient ways to manage project configurations directly from the status bar or command palette. Additionally, the update brings improvements to the Test Coverage feature, allowing Java developers to easily run tests with coverage.

As for integrations with the broader ecosystem, Spring users should be pleased. The update adds support for the @DependsOn annotation, improving navigation and assistance in defining dependencies between beans. Validation for JPQL and HQL queries used in @Query annotations has also been integrated, helping developers quickly identify errors in their queries. Moreover, support for updating Spring Boot projects via the Spring Boot Extension Pack has been enhanced to include Spring Boot 3.3.

TLS 1.3 Fixes in JDK 1.8

We'll stay with Microsoft, but now it's something unusual - let's talk about new features introduced to... JDK 8.

Transport Layer Security (TLS) 1.3, the latest version of the TLS protocol, offers significant improvements in security, performance, and simplicity compared to its predecessors, making it one of the most widely used network protocols. Therefore, Microsoft, as part of Azure cloud, introduces TLS 1.3 support in many of its services, some of which now have it as the default protocol.

However, with the increased adoption of TLS 1.3, the company observed performance issues in Java 8 applications that did not occur with JDK 11. The problem was identified as a bug in the early implementation of TLS 1.3 in JDK 11, which was fixed in JDK 20 and backported to JDK 11 and JDK 17. Recognizing the need to support Java 8 applications (let this version die already), the Azure SDK team worked on backporting this fix to JDK 8. After resolving several related issues, the developers completed the backport and achieved significant performance improvements, increasing request throughput from 500 rps to 15,000 rps in test cases, as reported by Bruno Borges, Principal Product Manager at Microsoft. These fixes will appear in the upcoming July updates of JDK 8 from Eclipse Temurin and other JDK distributions.
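If you are not sure whether a given runtime even offers TLS 1.3, a quick check is possible with the standard JSSE API. The sketch below is only a diagnostic helper, not part of the fix described above; the class name `TlsCheck` and its methods are my own, and it assumes the default `SSLContext` of the JVM it runs on is the one your application uses.

```java
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import javax.net.ssl.SSLContext;

// Hypothetical helper: lists the TLS protocol versions the current JVM supports.
public class TlsCheck {

    // Returns the protocols supported by the default SSLContext,
    // or an empty list if no default context is available.
    static List<String> supportedProtocols() {
        try {
            String[] protocols = SSLContext.getDefault()
                    .getSupportedSSLParameters()
                    .getProtocols();
            return Collections.unmodifiableList(Arrays.asList(protocols));
        } catch (NoSuchAlgorithmException e) {
            return Collections.emptyList();
        }
    }

    // True when the runtime advertises TLS 1.3 under its standard JSSE name.
    static boolean supportsTls13() {
        return supportedProtocols().contains("TLSv1.3");
    }

    public static void main(String[] args) {
        System.out.println("Java version: " + System.getProperty("java.version"));
        System.out.println("Supported protocols: " + supportedProtocols());
        System.out.println("TLS 1.3 available: " + supportsTls13());
    }
}
```

Running it on the same JDK build as the affected service shows which protocol versions can be negotiated at all, which is a useful first data point before digging into throughput numbers.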

And since we're on the topic of TLS, let's smoothly move on to the next point.

Quarkus 3.12

The main feature of Quarkus 3.12, announced by Guillaume Smet, is the introduction of the new TLS registry. It centralizes the management of TLS configurations, allowing the pre-definition of multiple parallel configurations that can later be used by individual extensions such as REST Client, Mailer, Redis, and WebSockets Next. The centralization aims to ensure consistent security management across the entire platform, where there were previously no specific guidelines in this regard.

Additionally, version 3.12 introduces several other extensions. The first is quarkus-load-shedding, which helps manage excessive service loads from individual clients, capable of traffic shaping and rejecting excessive requests. Quarkus JFR allows the addition of custom Quarkus events to Java Flight Recorder. Moreover, the Container Image extension now supports Podman out-of-the-box, offering an alternative to Docker.
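For readers who have not met load shedding before, the general idea can be sketched in a few lines of plain Java. To be clear, this is not how quarkus-load-shedding is implemented and it uses none of its API: `LoadShedder`, `tryHandle`, and the fixed concurrency limit are all assumptions made for illustration. The sketch simply rejects work outright once too many requests are already in flight, instead of queueing it.

```java
import java.util.Optional;
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Hypothetical, simplified load shedder: caps the number of concurrent requests
// and rejects anything above the cap immediately.
public class LoadShedder {

    private final Semaphore permits;

    LoadShedder(int maxConcurrentRequests) {
        this.permits = new Semaphore(maxConcurrentRequests);
    }

    // Runs the work if capacity is available; returns Optional.empty() when the
    // request is shed (or when the work itself returns null).
    <T> Optional<T> tryHandle(Supplier<T> work) {
        if (!permits.tryAcquire()) {
            return Optional.empty(); // over capacity: shed instead of waiting
        }
        try {
            return Optional.ofNullable(work.get());
        } finally {
            permits.release(); // always free the slot, even if the work throws
        }
    }

    public static void main(String[] args) {
        LoadShedder shedder = new LoadShedder(1);

        // The outer request holds the only slot, so the nested one is rejected.
        Optional<Boolean> innerAccepted =
                shedder.tryHandle(() -> shedder.tryHandle(() -> "inner").isPresent());
        System.out.println("Inner request accepted: " + innerAccepted.get());

        // Once the slot is free again, new requests are served normally.
        System.out.println("Next request: " + shedder.tryHandle(() -> "ok").orElse("shed"));
    }
}
```

Real implementations typically adapt the limit to observed latency and can prioritize some requests over others; the fixed semaphore here only shows the reject-early principle.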

Other significant updates in Quarkus 3.12 include the Native Image agent, which simplifies the configuration of native images based on integration tests, particularly helpful for complex applications. This integration makes the transition from JVM to native less cumbersome, though it may still require some adjustments. Spring integration has also been improved, thanks to the adaptation of the Spring compatibility layer to the Spring Boot 3 API. Kotlin support has been updated to Kotlin 2.0.

Other new features can be found in the Release Notes.

Vaadin 24.4

Finally, Vaadin. It returns with the 24.4 release fighting for simplicity, as Joonas Lehtinen, the project's CPO, titled the release notes. Vaadin 24.4 indeed delivers on this promise by unifying two frameworks for building web applications based on Vaadin: Flow and Hilla, which previously offered different approaches. Until now, Flow allowed writing user interfaces in pure Java and provided a productive server-side development environment, while Hilla supported applications with a Java backend and a React frontend. In the new Vaadin update, by incorporating Hilla into the core platform, developers can finally combine both approaches in one project and choose their preferred level of abstraction, freely mixing Java (Flow) and React (Hilla) views and components. The entire philosophy is well described in the post accompanying the release.

Also new is Vaadin Copilot, an AI assistant for creating user interfaces, which allows real-time UI editing and communication in natural language with code updates on the fly. Built on the GPT-4 model from OpenAI, Copilot offers advanced assistance, and its undo feature, integrated with IntelliJ and VS Code, keeps you in control of the project. It reminds me of anything but a "fight for simplicity," but maybe there's a method to this madness.

Additionally, Vaadin 24.4 offers several other enhancements: file-based routing for React views for better project structure, the frontend folder moved to src/main/frontend for a more organized layout, and Vaadin Flow Grid now supports Java records. The update also includes support for async/await in executeJs methods.