Jakarta Agents, Betrayal of Silicon, and Art of Autopsy with jcmd - JVM Weekly vol. 150

Today, again, three different, very interesting topics 😊

1. The arms race in the AI world: Jakarta, MicroProfile, and the new vision from the creator of Spring

I intentionally skipped Agents in the last AI piece - saved them for dessert, and it’s finally time to dig in. 😉

We’ll start at what many might see as the far end of the spectrum, with something that has remained in the DNA of Java Enterprise for years: standardization. Under the umbrella of the Eclipse Foundation, initiated by Payara, Reza Rahman, and key ecosystem figures, the Jakarta Agentic AI project was born. Its goal is as ambitious as it is characteristic of Jakarta: it’s not about creating yet another framework (we already have a few of those; more on that in a moment), but about defining a common language and rules of engagement for all players. The project focuses on specifying vendor-neutral APIs and shared lifecycles for “agent” workloads, building on solid foundations like CDI and REST. This is a long game - slow and methodical, but with the potential to shape the market for years.

Alright, but how is it supposed to work in practice? A developer declares an agent as a CDI-managed component and describes its behavior with annotations as well as the action “flow” via a fluent API (with an optional YAML/XML plugin), while the Jakarta runtime provides lifecycle management, dependency injection, and integrations with other specifications (REST, JSON-B, Transactions, Messaging, etc.). Access to LLMs is not standardized—the spec aims to offer a minimal façade and allow delegating to existing libraries (e.g., LangChain4j, Spring AI). Configuration is intended to go through Jakarta Config (or MicroProfile Config), metrics/traces via OpenTelemetry, and implementations should also be usable on Quarkus/Micronaut/Spring, even though Jakarta EE remains the first-class environment. For conformance, a TCK is planned and, initially, a separate standalone specification (with a view toward a future “AI profile” 😁). It sounds sensible and pragmatic - lots of bridges rather than poured concrete - so I’m cautiously optimistic, though as usual the key will be the quality of the first implementations and vendor adoption.

Standards can be tricky, but Java has a surprisingly good track record in this area.

The MicroProfile community approaches the topic very differently. Instead of drafting large specifications from scratch, the working group - which includes engineers from IBM, Red Hat, Microsoft, and Oracle - rolled up its sleeves and focused on pragmatic integration. Reading the working meeting notes of the MicroProfile AI initiative offers a fascinating look into “trench work.” Rather than pondering the theoretically best interface, developers are grappling with real problems: how to integrate the popular LangChain4j library with the CDI world, how to handle the instability of its API, how to avoid lock-in to a specific implementation (e.g., SmallRye), and how to ensure integration with other specifications like Fault Tolerance. This is the other end of the spectrum - the MicroProfile philosophy: be lightweight and agile, and deliver working solutions here and now, even if that means building bridges to tools that haven’t fully matured yet.

One might wonder whether the agent ecosystem is mature enough to start introducing standards, but my sense is that today’s rapid progress is partly an illusion. Yes, there’s a plethora of techniques optimizing the underlying machinery, but a certain core (Guardrails, Evals, Memory, RAG, MCP) is going to stick around. Promoting the narrative that “things are changing so fast we can’t keep up” turns the whole area into a toy accessible only to hobbyists and startups, pushing it out of reach of typical enterprise operating modes. By introducing such standardization, Java can address the needs of its core market quite well.

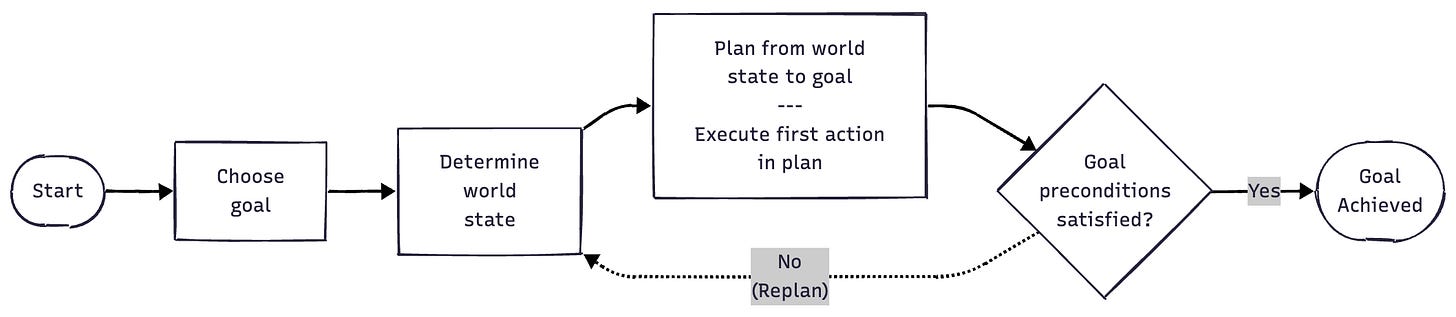

That said, I have no doubt that the fundamental nature of AI agents is different - or at least that’s the premise of Rod Johnson, the creator of the Spring Framework, who’s back with a new project called Embabel. Embabel is not yet another low-level library but a high-level framework built on top of Spring AI, designed to tackle the biggest pain points of production AI: non-determinism and the difficulty of testing. At the heart of Embabel is a planning mechanism based on the GOAP (Goal-Oriented Action Planning) algorithm rather than the whims of a language model.

GOAP works such that, instead of relying on a language model’s “good mood,” you first declare what you want to achieve (the goal state), and only then does a planner figure out how to get there by arranging the lowest-cost sequence of actions that satisfy preconditions and change the world with predictable effects. The LLM remains a tool inside actions (summarize, refactor, generate code), but it’s the planner - with costs, heuristics, and re-planning - that holds the reins.
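To make the idea a bit more tangible, here is a minimal GOAP-style planner in plain Java. It is only a sketch and not Embabel's API: the `Action` record, the fact names, and the costs are all made up for illustration, and the search is a simple uniform-cost search over sets of facts, without heuristics or re-planning.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashSet;
import java.util.List;
import java.util.Optional;
import java.util.PriorityQueue;
import java.util.Set;

// Hypothetical names throughout; this is plain Java, not Embabel's API.
final class GoapSketch {

    /** An action: the facts it needs, the facts it makes true, and what it costs. */
    record Action(String name, Set<String> preconditions, Set<String> effects, int cost) {}

    /** A search node: facts that hold so far, actions taken, accumulated cost. */
    private record Node(Set<String> state, List<String> steps, int cost) {}

    /** Uniform-cost search for the cheapest action sequence that reaches the goal. */
    static Optional<List<String>> plan(Set<String> start, Set<String> goal, List<Action> actions) {
        PriorityQueue<Node> open = new PriorityQueue<>(Comparator.comparingInt(Node::cost));
        Set<Set<String>> closed = new HashSet<>();
        open.add(new Node(Set.copyOf(start), List.of(), 0));
        while (!open.isEmpty()) {
            Node node = open.poll();
            if (node.state().containsAll(goal)) {
                return Optional.of(node.steps());
            }
            if (!closed.add(node.state())) {
                continue; // this state was already expanded via a cheaper path
            }
            for (Action action : actions) {
                if (!node.state().containsAll(action.preconditions())) {
                    continue; // preconditions not met in this state
                }
                Set<String> next = new HashSet<>(node.state());
                next.addAll(action.effects());
                List<String> steps = new ArrayList<>(node.steps());
                steps.add(action.name());
                open.add(new Node(next, steps, node.cost() + action.cost()));
            }
        }
        return Optional.empty(); // the goal is unreachable with these actions
    }

    /** Made-up actions; the two "summarize" variants differ only in cost. */
    static List<Action> demoActions() {
        return List.of(
                new Action("fetchDiff", Set.of(), Set.of("diff"), 1),
                new Action("summarizeWithLargeModel", Set.of("diff"), Set.of("summary"), 10),
                new Action("summarizeWithSmallModel", Set.of("diff"), Set.of("summary"), 3),
                new Action("postComment", Set.of("summary"), Set.of("commentPosted"), 1));
    }

    public static void main(String[] args) {
        // prints [fetchDiff, summarizeWithSmallModel, postComment]
        System.out.println(plan(Set.of(), Set.of("commentPosted"), demoActions()).orElseThrow());
    }
}
```

The point of the toy: the LLM calls would live inside the actions, while the order of those actions falls out of preconditions, effects, and costs, which makes the flow itself something you can unit-test.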

The result? Determinism at the flow level, testability (action contracts are checkable), cost control (tokens/time/risk built into the cost function), and the ability to integrate with enterprise SLOs, caches, guardrails, and evals. In other words: GOAP provides a skeleton that lets you “tame” AI non-determinism without extinguishing its power - exactly the compromise that’s been missing for agents to leave the lab and enter production processes in a stable way. You can learn more in a very good talk:

History may be coming full circle. Two decades ago, Rod Johnson created Spring as a response to J2EE’s complexity. Today, he’s building Embabel to bring engineering discipline to the chaotic world of intelligent agents. Which naturally raises the question: how do we do this in Java today - without waiting for final standards and without immediately building a full GOAP-style planner? We have another option: langchain4j and its langchain4j-agentic.

Meme stolen from Konrad Bujak - couldn’t resist, man.

langchain4j-agentic is an agent orchestration framework, largely developed by Mario Fusco, extending LangChain4j, which focuses on simplifying connections to LLMs and easy switching between them. The foundation of agent operation is the AI Service, which provides the tools needed to work with verbose and non-deterministic LLMs, including: structuring (sending and receiving Java objects), guard rails (I/O control), instructions, memory management, observability, a content retriever (to fetch knowledge from databases or documents), and tools that the model can invoke. Agents in the langchain4j sense are primarily wrappers around the AI Service, created to enable smooth embedding in workflows.

The framework supports three agent types: AI Agents (with an LLM at the core), Non-AI Agents (regular deterministic code, recommended as a faster and cheaper option when an LLM isn’t needed), and Human-in-the-Loop Agents (requiring human intervention or approval). Orchestration can be performed deterministically (workflow), where code determines the order of agent calls and data flow (e.g., sequences, parallel calls, loops, conditionals), allowing composed workflows; or via self-orchestration, where a supervising agent dynamically decides when to invoke a subordinate agent—useful in less well-defined processes like travel planning. All variables circulating in the agent system (inputs, intermediates, outputs) are managed within a single, overarching Agentic Scope, which also serves as the conversation context.
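The deterministic (workflow) style is easy to picture in plain Java. The sketch below deliberately does not use the langchain4j-agentic API: `Scope`, `Agent`, `sequence`, and `when` are hypothetical stand-ins I made up to show the shape of the idea, and the "AI" and "human" agents are stubbed with lambdas.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.function.Predicate;

// Plain-Java illustration only; none of these types are langchain4j-agentic API.
final class WorkflowSketch {

    /** Shared state that every agent reads its inputs from and writes its output to. */
    static final class Scope {
        private final Map<String, Object> values = new LinkedHashMap<>();
        Object read(String key) { return values.get(key); }
        void write(String key, Object value) { values.put(key, value); }
    }

    /** An agent is just a step operating on the shared scope. */
    interface Agent { void invoke(Scope scope); }

    /** Deterministic sequence: code, not a model, fixes the order of the calls. */
    static Agent sequence(Agent... agents) {
        return scope -> {
            for (Agent agent : agents) {
                agent.invoke(scope);
            }
        };
    }

    /** Conditional: runs the agent only when the predicate holds for the scope. */
    static Agent when(Predicate<Scope> condition, Agent agent) {
        return scope -> {
            if (condition.test(scope)) {
                agent.invoke(scope);
            }
        };
    }

    /** Builds and runs a three-step workflow for the given topic. */
    static Scope run(String topic) {
        // Non-AI agent: ordinary deterministic code.
        Agent normalize = s -> s.write("topic", ((String) s.read("topic")).trim().toLowerCase(Locale.ROOT));
        // Stand-in for an AI agent; a real one would call an LLM here.
        Agent draft = s -> s.write("draft", "Draft about " + s.read("topic"));
        // Stand-in for a human-in-the-loop agent; a real one would wait for approval.
        Agent approve = s -> s.write("approved", true);

        Agent workflow = sequence(normalize, draft, when(s -> s.read("draft") != null, approve));
        Scope scope = new Scope();
        scope.write("topic", topic);
        workflow.invoke(scope);
        return scope;
    }

    public static void main(String[] args) {
        Scope scope = run("  JVM Agents ");
        System.out.println(scope.read("draft") + " / approved=" + scope.read("approved"));
    }
}
```

Self-orchestration would replace the hard-coded `sequence` with a supervising agent that picks the next step at runtime; the shared scope stays the same.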

You can learn more about LangChain4j Agents from a talk by Lize Raes, Developer Advocate Java and AI at Oracle, from Devoxx BE.

And since we’re on Devoxx talks, one more: Paul Sandoz presented Java for AI - a superb survey of all the techniques currently being developed at the JVM level - what he calls things that cannot be added to Java purely via libraries. In my view, it’s required viewing today for anyone who wants to understand, with very concrete examples, how individual new Java initiatives map to existing AI project problems.

Best way to spend the hour today 😁.

2. On ARM: Why Your Processor Sometimes Lies

Building abstractions as powerful as AI agents is exciting, but it’s easy to forget that underneath there’s a complex - and sometimes treacherous - dance of electrons. Recently, we had a chance to see what happens when that dance stumbles and the abstraction we rely on starts to leak.

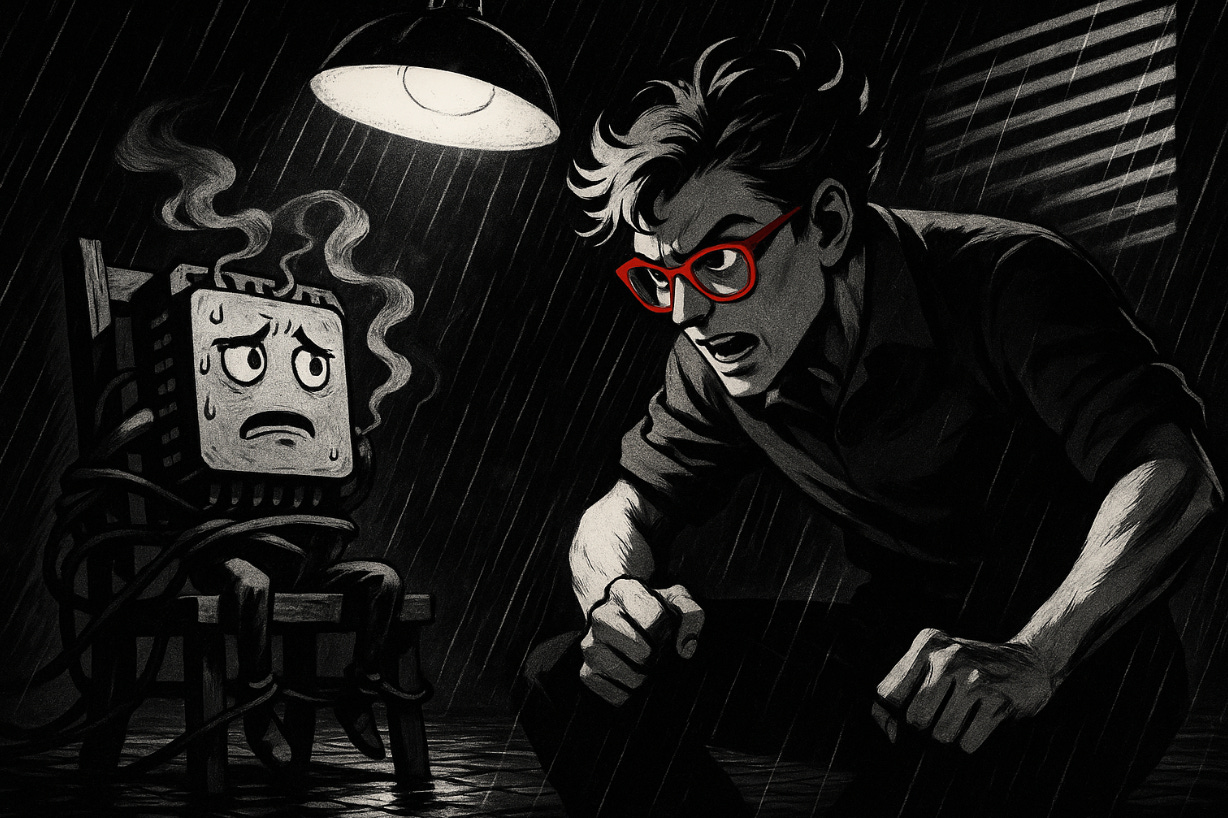

Imagine a locked-room murder mystery. Impossible things are happening in your application. Code that is 100% certain an object isn’t null suddenly throws a NullPointerException. Conditional branches get executed that logically should never be reached. The application freezes at random, and the garbage collector or interpreter logs show errors about corrupted object pointers (the so-called “oops”).

This isn’t a typical programming bug, as it might seem—it’s something much deeper and more insidious. Engineers working with the JVM on the AArch64 (ARM) architecture encountered exactly these symptoms. The bug, labeled JDK-8369506, was rare but critical and indicated that something fundamental was breaking the coherence of the Java heap. The trail led not to application code, but to the very heart of the JVM–CPU interaction.

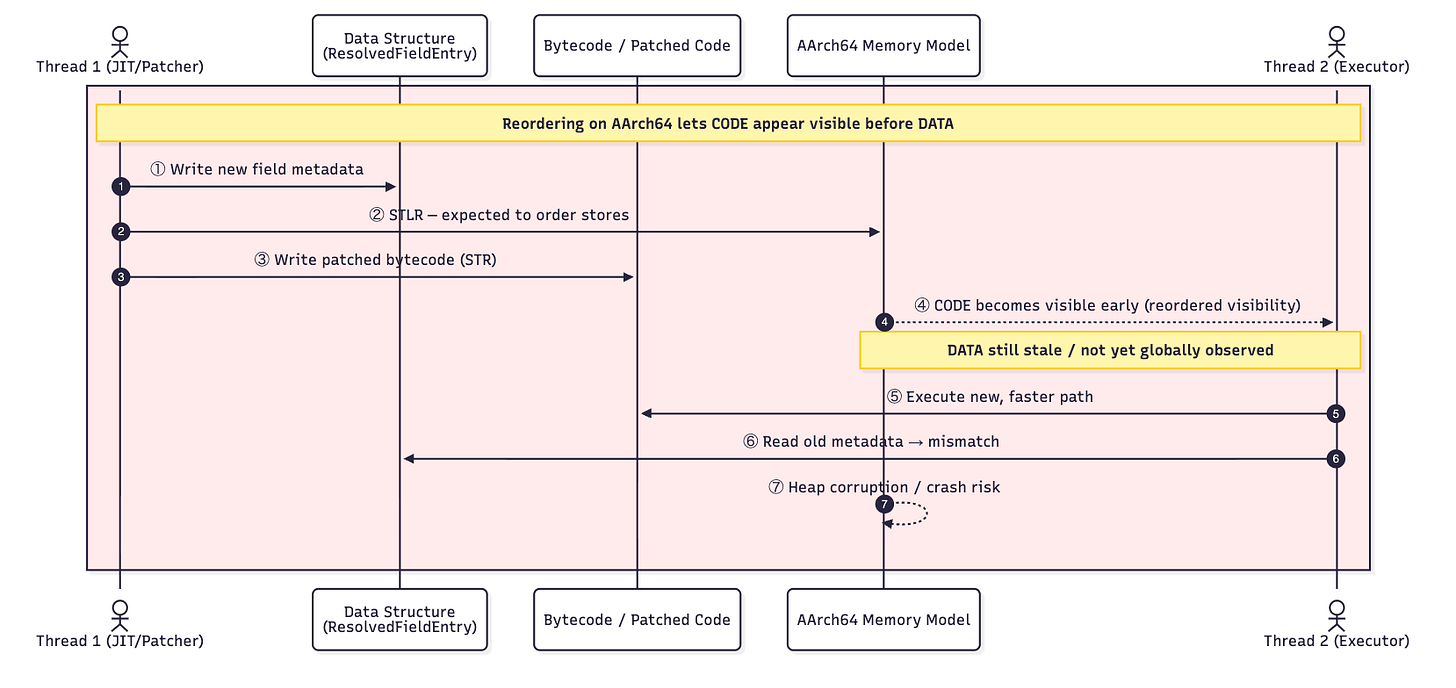

At the root of the problem lies a JVM optimization called RewriteBytecodes and a characteristic of ARM processors known as the “weak memory model.”

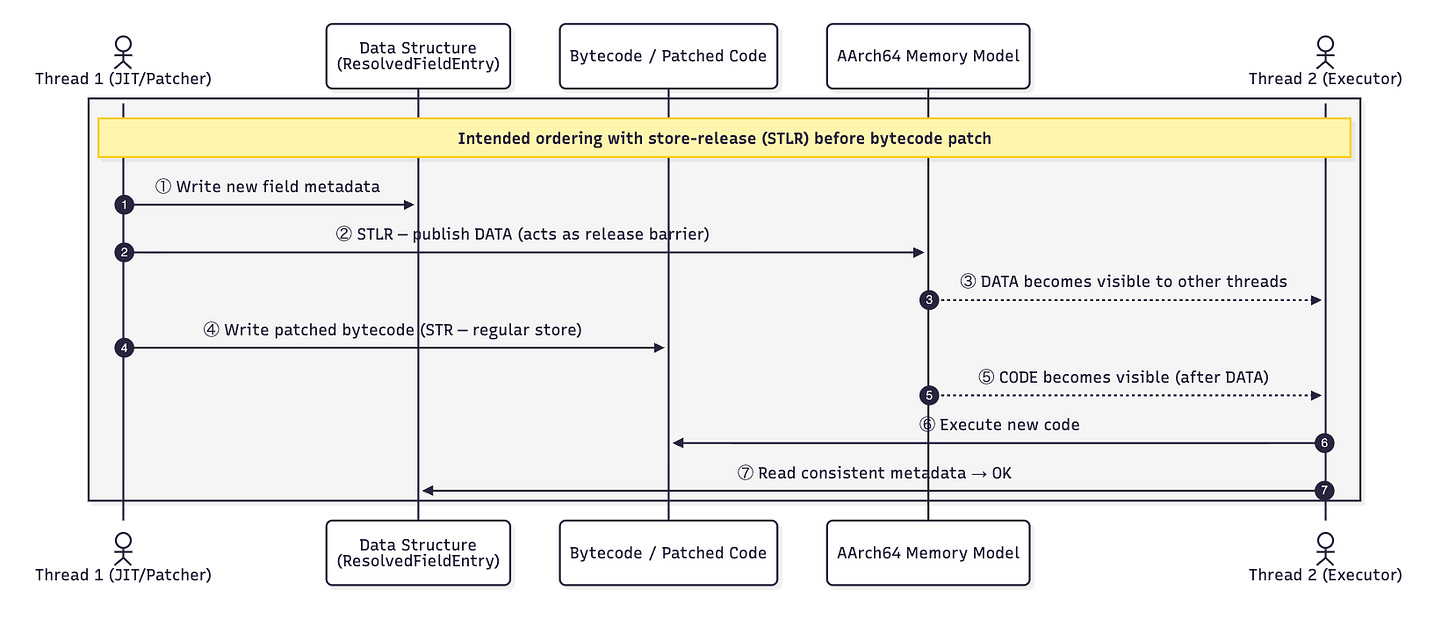

In simplified terms, when the JVM executes a certain piece of code for the first time, it may “rewrite” it on the fly so that subsequent executions are faster. This bytecode update process has two key steps: first, the data structure describing the field (ResolvedFieldEntry) is updated; then the actual modification of the code itself follows.

The first step uses a special processor instruction (STLR), which should act as a memory barrier - a signal to other threads that the operation has completed and the data is consistent. The second step is a regular memory store (STR).

And here we get to the crux of the issue. In the name of maximum performance, AArch64 processors allow themselves to reorder operations if they deem it harmless to the outcome. In this case, the processor was catastrophically wrong. Situations occurred where a second thread could observe the modified bytecode (step two) before it saw the fully updated data structure (step one). As a result, it tried to execute the new, faster code while operating on incomplete metadata, which could lead to chaos and corruption of the Java heap.

The fix was to add an explicit memory barrier in the JVM code, forcing the processor to maintain the correct ordering. It’s like putting up a “stop” sign the processor cannot ignore. The issue is still open for Arm32.
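For those who prefer to see ordering rules in Java rather than in ARM assembly, here is an analogy using `VarHandle` access modes. This is not HotSpot code: the class and its two fields are hypothetical stand-ins for "metadata" and "patched bytecode." The rule it illustrates is that a release store only keeps earlier writes from moving after it, so the release has to sit on the store that publishes, and the reader has to pair it with an acquire load.

```java
import java.lang.invoke.MethodHandles;
import java.lang.invoke.VarHandle;

// Illustrative analogy; field names are hypothetical and this is not HotSpot code.
final class PublicationSketch {
    private int metadata;       // stands in for the resolved field entry
    private boolean rewritten;  // stands in for the patched bytecode

    private static final VarHandle REWRITTEN;
    static {
        try {
            REWRITTEN = MethodHandles.lookup()
                    .findVarHandle(PublicationSketch.class, "rewritten", boolean.class);
        } catch (ReflectiveOperationException e) {
            throw new ExceptionInInitializerError(e);
        }
    }

    /** Writer: fill in the metadata first, then publish the "fast path" flag. */
    void publish(int value) {
        metadata = value;
        // Release store: the earlier write to metadata cannot be reordered after it.
        REWRITTEN.setRelease(this, true);
    }

    /** Reader: returns the metadata once the flag is visible, or -1 if not yet published. */
    int readIfPublished() {
        // Acquire load: the later read of metadata cannot be reordered before it.
        if ((boolean) REWRITTEN.getAcquire(this)) {
            return metadata;
        }
        return -1;
    }

    /** Races a writer against a spinning reader and counts reads that missed the metadata. */
    static int countStaleReads(int rounds) {
        int stale = 0;
        for (int i = 0; i < rounds; i++) {
            PublicationSketch shared = new PublicationSketch();
            int[] seen = { -1 };
            Thread reader = new Thread(() -> {
                int value;
                do {
                    value = shared.readIfPublished();
                } while (value == -1);
                seen[0] = value;
            });
            Thread writer = new Thread(() -> shared.publish(42));
            reader.start();
            writer.start();
            try {
                writer.join();
                reader.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            if (seen[0] != 42) {
                stale++;
            }
        }
        return stale;
    }

    public static void main(String[] args) {
        System.out.println("stale reads: " + countStaleReads(1_000));
    }
}
```

With the release/acquire pair in place the reader can never see the flag without the metadata. Swap them for plain accesses and the Java memory model no longer guarantees that, even if you would be hard-pressed to reproduce a failure on your laptop.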

I’m curious now how much this impacts performance.

I’m sharing this because this bug (which the JDK engineers disclosed last week) is a textbook example of a “leaky abstraction.” Java’s “write once, run anywhere” promise is supposed to shield us from details like the processor’s memory model. Yet this story shows that our high-level applications are always at the mercy of the physical laws governing silicon - or rather, the ISA built on top of it.

BTW: I was forced to do a bit of analysis as I need to update my Arm talk for Øredev ... Perfect timing 😜

3. jcmd as Coroner: JEP 528 and New Life After JVM Death

Fixing such a devious bug is an engineering triumph. But what if the worst has already happened and we’re left with the digital corpse of our application in the form of a core dump file? For years, JVM autopsy (yes, I’ve recently gone back to reading Sherlock Holmes - thanks for asking) was a grim and frustrating chore, but a brilliantly simple solution has appeared on the horizon.

A bit of context - when the JVM crashes at the native level, the operating system writes its entire memory state to a file. Analyzing this file, known as post-mortem analysis, has so far been a nightmare, because both available options have serious drawbacks.

The first option is to use native debuggers like gdb. They’re powerful, but “blind to Java.” They see raw memory addresses but have no understanding of JVM data structures. The second option is the jhsdb tool, which could interpret those structures, but its code was outdated, brittle, and a maintenance nightmare.

JEP 528 proposes a new, better approach: extend the well-known and well-liked jcmd tool - used to diagnose live JVM processes - so it can also analyze dead ones. The key is a brilliant “revival” technique. jcmd creates a new, empty subprocess and then maps memory segments from the core dump file into its address space, preserving their original addresses. The result is a “zombie” JVM. It doesn’t execute code, but all its internal data structures are perfectly reconstructed.

Here’s the core of the genius: jcmd can now invoke the exact same native JVM functions it uses to diagnose a live instance in order to query this “zombie.” The diagnostic code doesn’t need to know whether the target is alive or dead, which drastically lowers tool maintenance costs and guarantees the diagnostician is always perfectly in sync with the JVM version that crashed.
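If you have never looked at what those diagnostic commands are, you can call one from inside a live JVM through the HotSpot `DiagnosticCommand` MBean, which exposes the same command set that `jcmd <pid> Thread.print` uses. The sketch below assumes a HotSpot-based JDK with the `jdk.management` module present; the class name is mine, and it says nothing about the JEP 528 command-line syntax for core files, which I won't guess here.

```java
import java.lang.management.ManagementFactory;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Assumes a HotSpot-based JDK; the class name is hypothetical.
final class DiagnosticCommandSketch {

    /** Runs the Thread.print diagnostic command against the current, live JVM. */
    static String threadDump() {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            ObjectName name = new ObjectName("com.sun.management:type=DiagnosticCommand");
            Object result = server.invoke(
                    name,
                    "threadPrint",                              // MBean name for Thread.print
                    new Object[] { new String[0] },             // no extra options
                    new String[] { String[].class.getName() }); // single String[] parameter
            return (String) result;
        } catch (JMException e) {
            throw new IllegalStateException("DiagnosticCommand MBean not available", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(threadDump());
    }
}
```

The appeal of the JEP is that this kind of command would produce the same report whether the target is running or already in the morgue.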

JEP 528 is therefore a deep, foundational investment in serviceability - the ability to diagnose and fix problems in the worst-case scenarios. This emphasis on solid, reliable tooling is a hallmark of a mature platform.

PS: Since we’re on maturity and tools, have a look at the latest progress in Project Leyden. Its goal is to introduce static Java images, which could drastically reduce application startup times. It’s another quiet revolution happening behind the scenes that could fundamentally change how we think about deploying JVM applications. Yesterday, a new JVMLS video by Dan Heidinga was finally published, covering recent developments in Leyden.

PS2: Yes, I’m still waiting for all the JVMLS videos to drop so I can write them up—maybe they’ll land by the holidays. 😁

PS3: I can already invite everyone to Jfokus in Stockholm, in February 2026. My first time in a 90-minute slot! 😁

PS4: 150 volumes done 🚀🚀🚀🚀. It was hell of the ri