Do you really know your Java? From Reddit tricks to JetBrains’ AI Language - JVM Weekly vol. 141

Once again have the chance to dive into one specific topic for the entire edition. And today’s theme will be… programming languages and their features.

Once again have the chance to dive into one specific topic for the entire edition. And today’s theme will be… programming languages and their features.

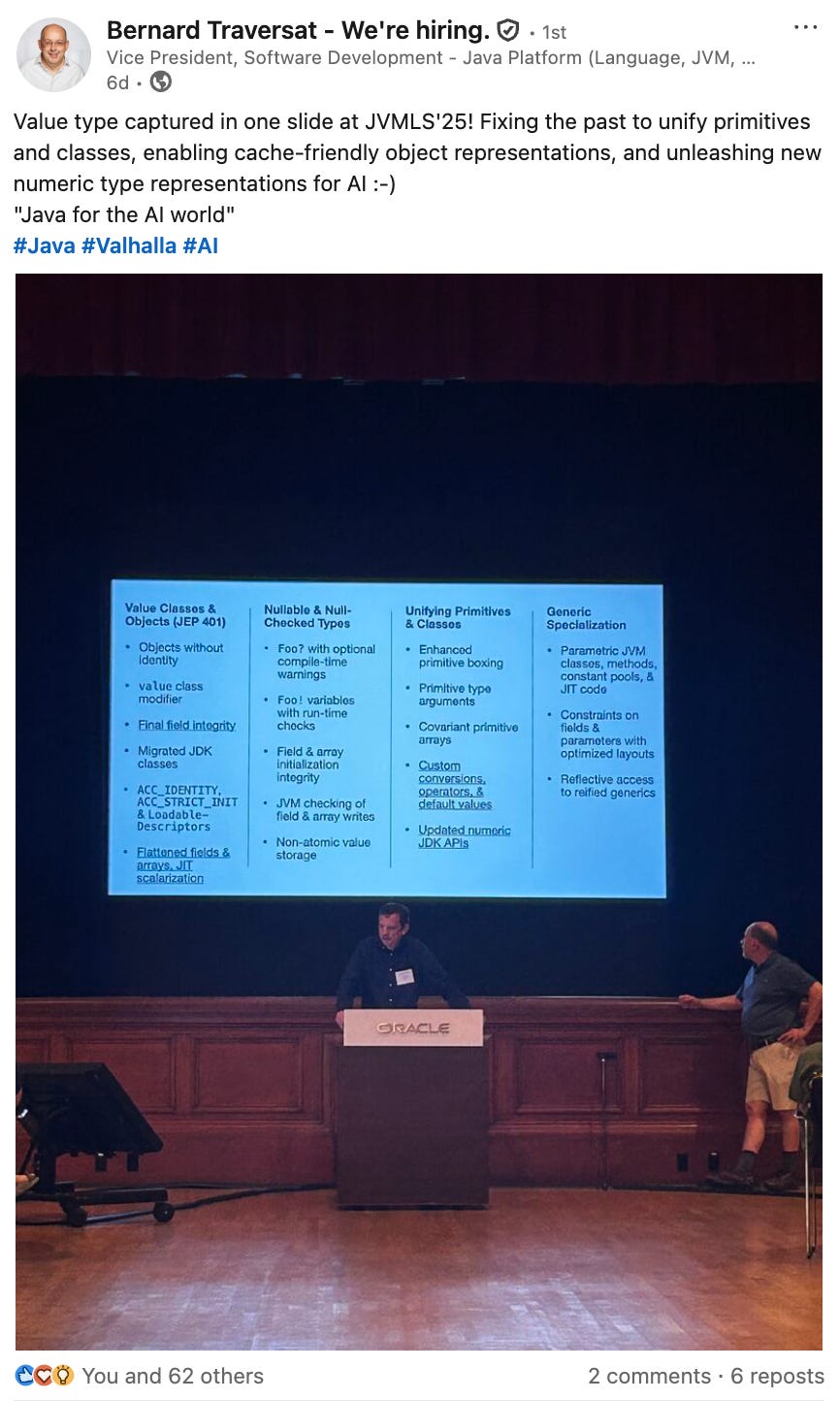

Last week, we had the JVM Language Summit (JVMLS) - one of the most interesting events in our ecosystem, where JVM creators share everything happening “on the edge.” Unfortunately, at the time of writing, there isn’t much material available yet (currently, only From Final to Immutable: The not-so-final word on final #JVMLS is online), but I still think it’s worth reflecting on the various features we already have in JVM languages and how the community perceives them — especially since there’s been a lot of discussion on that front.

I feel we’ll be returning to JVMLS more than once - I can’t wait to hear the updates on GPUs.

However, we’ll kick off today’s proper edition with something (not exactly an “announcement,” because it’s hard to call it that) very interesting - a piece of news from an interview for InfoWorld. Cause... did you know that JetBrains is working on a brand-new programming language?

Turns out they are. Kirill Skrygan, CEO of JetBrains, revealed some details about this as-yet-unnamed language, which is supposed to operate at a “higher level of abstraction” than anything we know so far - including Kotlin and Java. The idea is as ambitious as it is revolutionary: instead of writing code to implement application logic, developers would describe its architecture, ontology, and overall assumptions in a special language that, as Skrygan put it, would be “essentially English.”

That’s my "licentia poetica" talking, but I imagine what Kirill means is something similar to the philosophy behind Kotlin. Just as Kotlin was designed with features tailored for maximum IDE efficiency, this new language seems designed with prompting in mind. Imagine a scenario where you want to build a cross-platform app for iOS, Android, and the web. Instead of writing three separate applications, you create one specification document in this new language. Then, “AI agents, together with JetBrains tools, generate the code for all these platforms.”

The goal is to make AI code generation more controllable, transparent, and predictable - addressing current issues with the non-deterministic nature of large language models. The only question is whether this is still really a programming language, or already something closer to Context Engineering.

This project embodies a classic engineering trade-off between high abstraction and maintaining control and explainability of the resulting project. It’s an old problem - I still remember compiling every possible language to JavaScript and then wrestling with source maps. 😁😁

By raising the level of abstraction, JetBrains aims to boost productivity. But the same abstraction layer can hide the details you need for debugging, optimization, or handling non-standard challenges. It’s an intriguing direction, showing that the boundaries of “what programming is” are still being redefined - but we’ll see how it works out.

Programming languages are fascinating — especially the new and shiny ones, right? But let me ask a controversial question: how well do you really know your main one?

Recently, a Reddit thread caught my attention and set the tone for this whole edition: Teach Me the Craziest, Most Useful Java Features — NOT the Basic Stuff. It turned out to be a real goldmine of hard-earned knowledge from the trenches of production deployments. A great reminder that real power often lies not in the latest flashy frameworks, but in a deep understanding of the tools we already have.

Here are three examples that really stood out (sharing them there, as I know that most of you do not click the link - yes, I'm talking to you, dear reader 😜):

First - enums with abstract methods. This is an elegant way to implement the Strategy pattern without boilerplate. You define an abstract method in the enum, and each constant provides its own implementation. The result is a type-safe, concise, and readable structure for managing related but different behaviors — for example, different mathematical operations or data-processing strategies.

public enum Operation {

PLUS {

@Override

public double apply(double x, double y) {

return x + y;

}

},

MINUS {

@Override

public double apply(double x, double y) {

return x - y;

}

},

TIMES {

@Override

public double apply(double x, double y) {

return x * y;

}

},

DIVIDE {

@Override

public double apply(double x, double y) {

if (y == 0) {

throw new ArithmeticException("Cannot divide by zero");

}

return x / y;

}

};

public abstract double apply(double x, double y);

}Second - remote debugging. It’s been around for years, yet many developers still treat it like black magic. The thread pointed out that attaching your IDE’s debugger to a running application in production or test environments “isn’t much harder than configuring JMX.” Crucially, modern IDEs let you configure breakpoints that pause only the specific thread handling a given request, not the entire application — making it an extremely powerful and practical tool for diagnosing live systems. I used it back in my first job, and yes, it’s incredibly useful.

Third - dedicated classes for identifiers. Another pattern I really value (especially in Kotlin, where they can be type aliases). Instead of passing IDs as raw primitives (like String or long), you wrap them in simple dedicated classes, like AccountId or PurchaseId. Why? Because the compiler won’t catch a mistake if you accidentally pass a purchaseId to a method expecting an accountId. But if the method signature is processPurchase(AccountId accountId), that mistake becomes impossible. This is a mature programming approach, using types as a safety net for both the compiler and future developers.

public void transferFunds(long sourceAccountId, long destinationAccountId, double amount) {

}

vs

public record AccountId(long value) {}

public record PurchaseId(long value) {}

public void processPurchase(AccountId accountId, PurchaseId purchaseId) {

}

As the thread notes, we’re eagerly awaiting value classes from Project Valhalla, which should significantly reduce the overhead of this pattern.

These examples show that Java still holds a huge, often untapped potential. Before rushing toward the next shiny new feature, it’s worth asking ourselves: have we really squeezed everything out of what we already have at our fingertips?

And speaking of Reddit, another post popped up there recently that sparked an interesting discussion about Structured Concurrency. The author, initially enthusiastic about Structured Concurrency (SC), came to the conclusion - after a closer look at the latest JEP proposals -that the API, in its current form, is too complex and contains too many “sharp edges” for common use cases.

His critique is precise and serves as a textbook example of valuable community feedback (I’m actually surprised to see it on Reddit rather than on the loom-dev mailing list). He points out specific pitfalls a programmer might fall into: forgetting to call join(), the lack of idempotence in that method, the risk of calling get() on a subtask before join(), or calling fork() after join(). All of this adds up to an API that demands a high level of discipline and knowledge of its internal rules from the user.

The key takeaway from this analysis goes even deeper. The author identifies the root of the complexity in a fundamental design decision: the attempt to unify two distinct concurrency patterns under one API. One is the heterogeneous fan-out model—where all tasks must succeed, for example fetching data from multiple services to aggregate the results. The other is the “first success wins” model—where a single successful result is enough, such as querying multiple database replicas and taking the first response. Attempting to create one universal tool for both scenarios, in his view, has led to unnecessary complication.

As a solution, the author proposes that the core Structured Concurrency API focus solely on the dominant case - “all must succeed” (estimated to cover over 90% of use cases). The rarer “race” scenario could be handled by a separate, dedicated method or left to library implementations. Such a split could result in a much simpler, safer, and easier-to-use API for the vast majority of developers.

This public debate offers an interesting insight into Java’s evolution (which usually takes place in a fairly closed-off way on the aforementioned mailing lists - worth following, by the way). This evolution is not a monologue from Oracle but a dialogue with the community - one that requires many, many iterations to arrive at something truly usable for developers. Structured Concurrency is no exception.

Java has gone through several such evolutions. If you appreciate history, there’s been a particularly interesting article recently on the history of Java as a whole. History of Java: evolution, legal battles with Microsoft, Mars exploration, Spring, Gradle and Maven, IDEA and Eclipse from PVS-Studio is a fascinating journey through the key, often lesser-known moments that shaped the Java we know today. It shows that the language’s success is not only a matter of technology but also of business strategy, legal battles, and - most importantly - the strength of its ecosystem.

I especially liked the focus on specific aspects. One key moment in the platform’s history was the so-called “browser wars” and legal battle with Microsoft. The Redmond giant bundled Windows 98 with its own, incompatible JVM, breaking the sacred “write once, run anywhere” rule by omitting critical APIs (like RMI) and adding its own Windows-specific extensions. The lawsuit won by Sun Microsystems was a milestone that protected Java from fragmentation and preserved its cross-platform identity. Buying Java by Oracle also was not the destructive step a lot of people expected it to be.

The article also highlights the huge role the community and third-party tools have played in Java’s evolution. The creation of the Spring framework by Rod Johnson - as a much simpler and more flexible alternative to the heavy J2EE standard - was a real revolution. Similarly, the emergence of powerful, intelligent IDEs like IntelliJ IDEA and Eclipse forever changed developer productivity and comfort.

This history teaches us that the success of a technology platform is a complex… story. It’s not just about code and features, but also about the people, companies, conflicts, and collaborations that together create a living, breathing ecosystem. So if you ever wonder why I so often try to sneak in behind-the-scenes stories into this technical newsletter… now you know 😊

Since we’re already on the topic of Java’s history and evolution, let’s take a last detour before the end - into object-oriented programming itself. I recently came across a fascinating publication that challenges conventional OOP teaching methods and asks some uncomfortable questions about whether we’re truly preparing beginners for real-world programming challenges. Rethinking Object-Oriented Programming in Java Education by Max X.Z. is a provocative reflection, not so much about OOP itself as about how we teach it. The author argues that the traditional pedagogical approach - where beginners are bombarded with abstract concepts from day one - is deeply flawed.

The main criticism is the introduction of concepts like “class” and “object” through circular, meaningless definitions for a novice (“an object is an instance of a class”). Similarly, the standard boilerplate public class Main { public static void main(String args) { ... } } forces students to blindly retype keywords (public, static) whose meaning cannot be meaningfully explained at that stage. This teaching model, the author argues, promotes rote memorization and dogmatic following rather than critical thinking and understanding why certain constructs and patterns exist in the first place. Interestingly, the Java platform itself has already recognized this problem. There are ongoing efforts to simplify the “first contact” experience with the language, such as JEP 445: Unnamed Classes and Instance Main Methods.

The alternative he proposes is a problem-driven, iterative approach. Instead of teaching “just in case,” new language concepts are introduced just-in-time - exactly when they become the solution to a real, tangible problem. Learning starts with simple imperative code: variables, loops, and conditionals. Students can then write repetitive code, and when the pain of copy-pasting becomes acute, it’s time to introduce methods as a tool for solving repetition - procedural abstraction.

Next comes an assignment that requires managing multiple related pieces of data, like a user’s first name, last name, and age. Once passing numerous variables around becomes unwieldy, classes are introduced - initially as simple data containers without methods - to group them, a form of data abstraction. Only when both concepts are well understood are they combined into full-fledged object classes, where functionality is inseparable from the data, and the this keyword emerges naturally as a consequence of this union.

And then, in the next step, we can migrate from the “kingdom of nouns” to the “kingdom of verbs” - and for those who don’t know what I’m talking about, I recommend Steve Yegge classic post.

P.S. If you want to be even closer to the source when it comes to language-related updates, I recommend checking out the competition: inside.java has started publishing its own newsletter, where the Java Developer Relations team shares all official announcements as well as, for example, news about new JUGs or book releases. I’m not suggesting you should abandon this newsletter (please don’t do that), but I figured it was worth sharing the link.