Best of Foojay.io June 2024 Edition – JVM Weekly vol. 87

A month has passed, and it’s time for another review of Foojay articles.

A month ago, I announced that JVM Weekly joined the Foojay.io family. Foojay.io is a dynamic, community-driven platform for OpenJDK users, primarily Java and Kotlin enthusiasts. As a hub for “Friends of OpenJDK,” Foojay.io gathers a rich collection of articles written by industry experts and active community members, providing valuable insights into the latest trends, tools, and practices in the OpenJDK ecosystem.

Instead of selecting specific articles and dedicating entire sections to them in the weekly newsletter, I focus once a month on choosing a few interesting articles that might be useful or at least broaden your horizons by presenting intriguing practices or tools.

Foojay JCON Report

We’ll start again with the Foojay Podcast, or rather with several episodes of it. During the JCON conference, Frank Delporte conducted a series of interviews with interesting people from the community, which he compiled into four episodes:

• Markus Kett & Richard Fichtner discussing the JCON conference itself

• Geertjan Wielenga explaining what Foojay.io is

• Jonathan Vila talking about Sonar

• Soham Dasgupta & Mary Grygleski discussing Generative AI

• Mohammed Aboullaite on Java, Machine Learning, and training models

• Simon de Groot and Richelle Bussenius describing Masters Of Java - The annual code challenge for Java Developers of all levels

• Karl Heinz Marbaise and Steve Poole with insights on Sonatype, Maven, and SBOM

• Miro Wengner talks about Disciplined Engineering

• Marit van Dijk discusses IntelliJ IDEA, reading code, and AI Assistant

• Hinse ter Schuur talks about being a sustainable developer

• Otavio Santana talks about the persistence layer and evolving your career through open-source.

• Arjan Tijms on which version of Java to use.

• Ondro Mihalyi on creating small Java applications for Edge devices.

• Buhake Sindi comparing Jakarta EE to other frameworks and highlighting the Java community in South Africa.

• Patrick Baumgartner talks about messaging via Telegram.

• Gerrit Grunwald on garbage collectors, Intelligence Cloud, and identifying which of your code is actually used in production.

• Balkrishna Rawool on structured concurrency, virtual threads, and upcoming features in future Java releases.

• Piotr Przybyl on Test Containers, ToxiProxy, and testing applications in environments similar to production.

• François Martin on flaky tests, handling waits in unit tests, user interface tests, and reproducing flaky tests.

• Annelore Egger on volunteering at JCON.

As you can see, there’s a lot, but I’ve always liked the “talking heads” format, and I think it’s nice to see who’s who in the community.

And now, time for the main part:

Who instruments the instrumenters?

We’ll start this edition with Who instruments the instrumenters? by Johannes Bechberger. The article introduces the concept of Java code instrumentation, explaining how libraries like Spring and Mockito modify code at runtime to implement advanced features. Readers can learn about the meta-agent, which instruments other instrumenting agents, allowing dynamic analysis of bytecode modifications. The agent wraps every instance of ClassFileTransformer (an interface that allows bytecode to be modified as classes are loaded by the JVM) in a wrapper that compares the original and transformed bytecode and logs the differences, using the Vineflower tool for decompilation.
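To make the wrapping idea concrete, here is a minimal sketch using only the JDK’s `java.lang.instrument` API. The names `LoggingTransformer` and `MetaAgentSketch` are hypothetical and are not taken from the meta-agent itself; the sketch only records which classes came back with different bytes, whereas the real tool decompiles and diffs them.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.IllegalClassFormatException;
import java.security.ProtectionDomain;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical wrapper (not the meta-agent's real class): delegates to another
// transformer and records which classes came back with different bytes.
class LoggingTransformer implements ClassFileTransformer {
    private final ClassFileTransformer delegate;
    // Transformers may be invoked from several threads, so use a concurrent list.
    private final List<String> changed = new CopyOnWriteArrayList<>();

    LoggingTransformer(ClassFileTransformer delegate) {
        this.delegate = delegate;
    }

    @Override
    public byte[] transform(ClassLoader loader, String className,
                            Class<?> classBeingRedefined, ProtectionDomain domain,
                            byte[] classfileBuffer) throws IllegalClassFormatException {
        byte[] result = delegate.transform(loader, className, classBeingRedefined,
                domain, classfileBuffer);
        // A null result means the delegate performed no transformation.
        if (result != null && !Arrays.equals(result, classfileBuffer)) {
            changed.add(className);
            System.out.println("[meta] " + className + ": "
                    + classfileBuffer.length + " -> " + result.length + " bytes");
        }
        return result;
    }

    List<String> changedClasses() {
        return List.copyOf(changed);
    }
}

class MetaAgentSketch {
    // Feeds two fake "class files" through a dummy transformer that alters only one.
    static List<String> demo() {
        ClassFileTransformer dummy = new ClassFileTransformer() {
            @Override
            public byte[] transform(ClassLoader loader, String className, Class<?> c,
                                    ProtectionDomain d, byte[] bytes) {
                return className.endsWith("Changed")
                        ? Arrays.copyOf(bytes, bytes.length + 1)
                        : null;
            }
        };
        LoggingTransformer wrapper = new LoggingTransformer(dummy);
        byte[] fakeClassFile = {1, 2, 3};
        try {
            wrapper.transform(null, "com/example/Changed", null, null, fakeClassFile);
            wrapper.transform(null, "com/example/Untouched", null, null, fakeClassFile);
        } catch (IllegalClassFormatException e) {
            throw new IllegalStateException(e);
        }
        return wrapper.changedClasses();
    }
}
```

In a real agent, a wrapper like this would be put in place when other agents register their transformers; how the meta-agent does that is covered in the article itself.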

The article also details the proxy techniques Spring uses to instrument classes and interfaces, such as java.lang.reflect.Proxy and CGLIB. Examples show how Spring generates and modifies objects at runtime, and how analyzing the generated bytecode helps to understand optimizations and potential issues, like excessive method calls or exception handling quirks.
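For readers who have not used `java.lang.reflect.Proxy` directly, the following is a small sketch of an interface proxy that records each call before delegating. `Greeter`, `SimpleGreeter`, and `ProxyDemo` are made-up names for illustration; Spring’s own proxies do considerably more than this.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

// Hypothetical interface and implementation used only for this illustration.
interface Greeter {
    String greet(String name);
}

class SimpleGreeter implements Greeter {
    @Override
    public String greet(String name) {
        return "Hello, " + name;
    }
}

class ProxyDemo {
    // Names of the methods that were called through any proxy created below.
    static final List<String> calls = new ArrayList<>();

    // Wraps `target` in a JDK dynamic proxy that logs each call, then delegates.
    static <T> T logged(T target, Class<T> iface) {
        InvocationHandler handler = (proxy, method, args) -> {
            calls.add(method.getName());
            try {
                return method.invoke(target, args);
            } catch (InvocationTargetException e) {
                // Rethrow the exception thrown by the target, not the reflective wrapper.
                throw e.getCause();
            }
        };
        Object proxy = Proxy.newProxyInstance(
                iface.getClassLoader(), new Class<?>[] {iface}, handler);
        return iface.cast(proxy);
    }
}
```

A call such as `ProxyDemo.logged(new SimpleGreeter(), Greeter.class).greet("Foojay")` goes through the handler first, which is the hook frameworks use to add behavior around a method. JDK proxies only work for interfaces, which is why class-based tools like CGLIB exist alongside them.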

The article doesn’t end with theory; Johannes Bechberger and Mikaël Francoeur demonstrate how bytecode analysis helped them identify and fix bugs and propose optimizations. Readers get a handy set of instructions for downloading, installing, and using the meta-agent in practice, making it easier to understand the real changes tools introduce to their code.

The TornadoVM Programming Model Explained

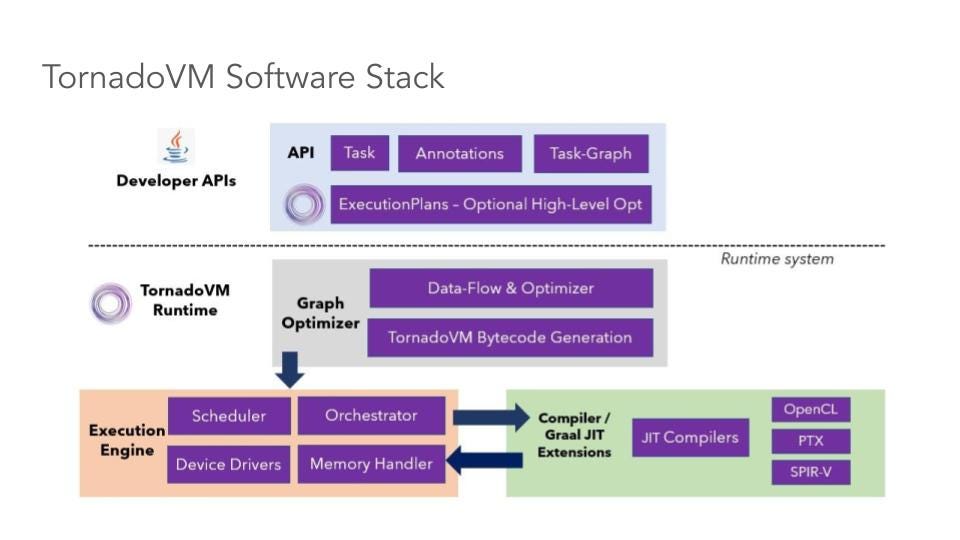

Next, we’ll change topics to my personal favorite - The TornadoVM Programming Model Explained by Juan Fumero. It introduces the TornadoVM programming model and explains how this platform allows developers to automatically run programs on heterogeneous hardware (like GPUs, CPUs, and FPGAs). TornadoVM extends the Graal compiler with a new backend for OpenCL, enabling JVM applications to be ported to various hardware accelerators and to dynamically migrate tasks between different architectures and devices without restarting the application.

For example, the TornadoVM programming model uses annotations like @Parallel to help the compiler identify code sections for parallel execution. These annotations can be used in loops without data dependencies, similar to programming models known from OpenMP (an API for parallel programming in C, C++, and Fortran on shared memory platforms) and OpenACC (a set of compiler directives designed to simplify programming for heterogeneous systems with accelerators such as GPUs). Additionally, TornadoVM offers a Task-Graph API for defining tasks and managing data migration between the host and accelerator, crucial in environments where memory is not shared between CPU and GPU.

An example in The TornadoVM Programming Model Explained uses TornadoVM for accelerating image blur filters. Using the @Parallel annotation in loops operating on image pixels, developers can achieve significant computation speedups by leveraging the GPU.
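To show what “a loop without data dependencies” looks like, here is a plain-JDK sketch of a 3x3 box blur. It deliberately does not use the TornadoVM API: a parallel stream stands in for the parallel loop, and the class name `BlurSketch` and the grayscale row-major layout are assumptions made for this illustration. In TornadoVM, a loop of this shape is the kind that would carry the @Parallel annotation and be compiled for an accelerator.

```java
import java.util.stream.IntStream;

class BlurSketch {
    // 3x3 box blur over a grayscale image stored row-major in a float array.
    // Pixels outside the image are ignored, so edges average fewer neighbors.
    static float[] blur(float[] in, int width, int height) {
        float[] out = new float[in.length];
        // Every row reads only from `in` and writes only its own part of `out`,
        // so the iterations are independent and can run in any order or in parallel.
        IntStream.range(0, height).parallel().forEach(y -> {
            for (int x = 0; x < width; x++) {
                float sum = 0f;
                int count = 0;
                for (int dy = -1; dy <= 1; dy++) {
                    for (int dx = -1; dx <= 1; dx++) {
                        int ny = y + dy;
                        int nx = x + dx;
                        if (ny >= 0 && ny < height && nx >= 0 && nx < width) {
                            sum += in[ny * width + nx];
                            count++;
                        }
                    }
                }
                out[y * width + x] = sum / count;
            }
        });
        return out;
    }
}
```

The key property is that no iteration reads a value another iteration writes. A loop that accumulated into a shared variable, for example, would not qualify without further changes.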

Tests showed that for certain workloads (so this is probably not a reason to migrate your Spring apps to TornadoVM), running on various platforms, from integrated Intel graphics to dedicated NVIDIA GPUs, can significantly increase performance compared to standard CPU-based implementations. Additionally, TornadoVM provides a convenient JDBC-like abstraction, allowing developers to utilize different accelerators without changing the application’s source code.

Getting Started with JobRunr: Powerful Background Job Processing Library

JobRunr is an open-source Java library for scheduling tasks and processing background jobs in a distributed environment. It enables background task execution with simple lambda expressions. JobRunr analyzes lambdas and stores metadata needed for task processing in a database (both RDBMS and NoSQL). With integration into popular frameworks like Spring Boot, Quarkus, and Micronaut, JobRunr stands out for its simplicity and extensive capabilities. JobRunr also offers distributed processing and built-in monitoring, making it an attractive choice for developers.

In Getting Started with JobRunr: Powerful Background Job Processing Library, authors Ronald Dehuysser, Donata Petkeviciute, and Ismaila Abdoulahi describe the project using the example of an order fulfillment system. This system required running many asynchronous tasks in the background, such as sending order confirmations, notifying the warehouse, and initiating shipping.

The article shows how JobRunr can be used to build such a system. The library enables easy creation of asynchronous and periodic tasks using simple lambdas and annotations like @Job and @Recurring. Automatic task retries in case of failures and a built-in dashboard for task monitoring made managing the order fulfillment process much easier. The ability to scale and process tasks in a distributed manner, in turn, allows for efficient resource management and operational continuity.

Indexing all of Wikipedia, on a laptop

A few years ago, the Internet joked about how Chuck Norris could supposedly save the entire web on floppy disks. Recently, I’ve been thinking about local language models like Llama, which can be used without internet access, as a similar concept. It might be a bit of a stretch, but what about having at least a fully local version of Wikipedia? This is the idea behind Jonathan Ellis’s article, Indexing all of Wikipedia, on a laptop.

In May, Cohere (a company I wrote about recently in the context of integration with langchain4j) published a dataset containing the entire Wikipedia, split into fragments and embedded as vectors using their multilingual-v3 model. Calculating this many embeddings yourself costs about $5,000, so the public release of this dataset enables individuals to create a “semantic vector index of Wikipedia” - essentially a searchable local version.

Vector databases have recently been associated mainly with LLMs, but their applications go beyond that. They allow efficient processing and comparison of large datasets by representing data in vector form. Besides natural language processing and serving as a data source for RAG, vector databases are used in image analysis, recommendation systems, and information retrieval. In the article, Jonathan Ellis, CTO of DataStax, describes how his library JVector (for managing vector representations of data) can be used to index data larger than RAM, making indexing the entire English Wikipedia on a laptop a practical reality.
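As a reminder of what “comparing data in vector form” means in practice, here is a minimal brute-force sketch of nearest-neighbor search by cosine similarity. It is not JVector’s API; `VectorSearchSketch` and its methods are hypothetical, and the full scan it performs over every vector is precisely the cost that an index is meant to avoid.

```java
class VectorSearchSketch {
    // Cosine similarity of two vectors of equal length.
    // Assumes neither vector is all zeros (that would divide by zero).
    static double cosine(float[] a, float[] b) {
        double dot = 0.0;
        double normA = 0.0;
        double normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Returns the index of the corpus vector most similar to the query,
    // or -1 if the corpus is empty. Scans every vector: O(n * dimensions).
    static int nearest(float[][] corpus, float[] query) {
        int best = -1;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < corpus.length; i++) {
            double score = cosine(corpus[i], query);
            if (score > bestScore) {
                bestScore = score;
                best = i;
            }
        }
        return best;
    }
}
```

With embeddings for every Wikipedia fragment, a linear scan like this is far too slow per query, which is why the indexing techniques described in the article matter.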

The main challenge in indexing large datasets is RAM limitations, since standard vector databases require storing full vectors and edge lists in memory during index construction. The JVector library, used in DataStax Astra, solves this problem by using compressed vectors, allowing the indexing of large datasets like Wikipedia on a home laptop. Requirements include Linux or macOS, 180 GB of free space for the dataset, 90 GB for the completed index, and disabling swap for performance optimization. The article also includes technical details on compression and index construction, as well as tips on search performance.

If you want to learn more about JVector, I highly recommend the podcast High-Performance Java, Or How JVector Happened, where Jonathan Ellis and Adam Bien discuss the project’s history and why it was initiated. It will also be interesting for those who want to better understand the role of Apache Cassandra in today’s IT ecosystem.

Exploring New Features in JDK 23: Simplifying Java with Primitive Type Patterns with JEP 455

Finally, something practical - a presentation of one of the new JEPs from last week’s JDK 23 preview - JEP 455: Primitive Types in Patterns, instanceof, and switch.

For readers of this newsletter, it shouldn’t be surprising that Java continues to evolve, introducing features that simplify coding practices and improve code readability. JEP 455 is one such proposal: it extends pattern matching, instanceof, and switch, making them more versatile and expressive by allowing primitive types (like int, long, boolean) to be used directly in pattern constructs, eliminating the need for unnecessary boxing.

A N M Bazlur Rahman’s article Exploring New Features in JDK 23: Simplifying Java with Primitive Type Patterns with JEP 455 shows how JEP 455 can be applied in an order processing system that distinguishes between logged-in and unidentified users. It creates a User object with an identifier and a loggedIn status, and the switch expression evaluates whether the user is logged in. The startProcessing method then uses another switch expression to handle different OrderStatus values, printing appropriate messages based on the order status.
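The shape of that example can be sketched roughly as follows. This is not the article’s code: the names `User`, `loggedIn`, `startProcessing`, and `OrderStatus` follow the description above, while the enum constants, messages, and the `OrderSketch` class are assumptions. Because JEP 455 is a preview feature in JDK 23 (it needs `--enable-preview`), the runnable code uses a conditional expression, and the boolean switch is shown in a comment.

```java
// Field names follow the article's description; everything else is illustrative.
record User(String id, boolean loggedIn) {}

// Hypothetical set of statuses; the article's enum may differ.
enum OrderStatus { NEW, PROCESSING, SHIPPED, DELIVERED }

class OrderSketch {
    static String startProcessing(User user, OrderStatus status) {
        // Without JEP 455, a boolean cannot be a switch selector, so we branch
        // with a conditional. With the preview feature enabled, this could read:
        //
        //   String who = switch (user.loggedIn()) {
        //       case true  -> "user " + user.id();
        //       case false -> "guest";
        //   };
        String who = user.loggedIn() ? "user " + user.id() : "guest";

        // Switch expressions over enums already work without preview features.
        String message = switch (status) {
            case NEW -> "order received";
            case PROCESSING -> "order is being processed";
            case SHIPPED -> "order shipped";
            case DELIVERED -> "order delivered";
        };
        return who + ": " + message;
    }
}
```

Note that the boolean switch in the comment needs no default branch: `true` and `false` cover every possible value, so the compiler can check exhaustiveness just as it does for the enum.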

We’ll return to Foojay.io articles next month, and in the meantime, I have enough material for at least two more editions… So next week, a summary of Spring I/O and some interesting announcements and events in the community.
