August: The Rest of the Story - JVM Weekly vol. 143

The new edition of JVM Weekly is here and, as always, there is a lot of "Rest" in "Rest of the Story".

TLDR: I’ve gathered many interesting links in August that didn’t make it into previous issues. Many aren’t extensive enough for a full section, but they still seem compelling enough to share. And to wrap things up, I’m adding a few (this month - a lot…) releases and updates.

1. Missed in August

The playlist from this year’s JVM Language Summit is slowly starting to fill up, but this time I’m not jumping the gun like last year - before I really get into the topic, I’ll wait until a larger portion of the recordings appears so I can review them in a broader context. There is one video I can’t help but recommend to everybody already, though: Brian Goetz’s talk Growing the Java Language.

It’s an excellent look at the process of developing Java as a language, full of surprises about the sources of inspiration for new features. If you didn’t expect one of them to be… Haskell, this session will certainly open your eyes - and show that Java’s evolution is the result of drawing from a truly wide spectrum of ideas.

An update has appeared in the Lombok repository that ensures compatibility with JDK 25 even before the official release of the new Java version. The key change was adapting the project’s “patcher” to handle the new Opcodes.V25, which allows Lombok to correctly recognize and modify code during compilation. This is the first time in the project’s history that it has gotten ahead of a JDK release and offered ready support at the Early Access stage rather than only after the fact. For Lombok, this is quite an achievement, because for years it was a symbol of a constant struggle with compatibility. The tool has always relied on interfering with the internal mechanisms of the javac compiler, which meant that each successive JDK version could turn its behavior upside down. Hence the recurring cycle: a new JDK release, user complaints, pressure on maintainers, and finally a patch restoring functionality.

The fact that Lombok has gotten ahead of events this time can be read as proof of the project’s maturity and an unusual happy ending in its turbulent history.

And now I have an interesting piece for you about large JVM code migrations. In the article Migrating Airbnb’s JVM Monorepo to Bazel by Jack Dai, Howard Ho, Loc Dinh, Stepan Goncharov, Theodore Tenedorio, and Thomas Bao (big project, so a lot of contributors), the authors describe the completion of a 4.5-year process to migrate Airbnb’s largest JVM codebase (containing millions of lines of Java, Kotlin, and Scala) from Gradle to Bazel. The results of the migration include, among other things, major acceleration of local builds and tests (3–5× faster), faster IntelliJ synchronizations (2–3×), and faster deployments to the development environment (also 2–3×), while the Build CSAT (build satisfaction) metric increased from 38% to 68%.

More in the original text 😊

Apache Fory has completed its incubation process and has been promoted to Top-Level Project status within the Apache Software Foundation. Shawn Yang, the project’s creator, emphasizes that Fory has achieved technical maturity and has adopted lasting principles of open governance consistent with the Apache Way. From December 2023 (entering the incubator as Apache Fury), through the renaming to Fory in May 2025, up to formal graduation on July 17, 2025 - the project underwent a full transformation, gaining extensive community support, regular releases, and the implementation of ASF-compliant practices.

Graduation from the Apache Incubator means that Fory has met all ASF requirements: it has demonstrated transparency (public discussions and votes), regular, policy-compliant releases, and a diverse, self-sustaining community without dependence on a single vendor. The entire path also nicely illustrates the incubation process itself - from a small start to a solid project that grows under Apache’s wing with full autonomy and stability for enterprise users.

In the GPULlama3.java project, based on TornadoVM, support was recently added for new models - Qwen3, Phi-3, Mistral, and Llama 3 in various formats (FP16, Q8, Q4). Thanks to this, users can now run these models on GPUs in pure Java, which opens up new possibilities for accelerating LLM inference.

As a reminder, GPULlama3.java is a TornadoVM project that ports the existing Llama3.java to enable GPU-accelerated inference in pure Java, without the need to use external native libraries. The code runs on the GPU via TornadoVM’s JIT, and the implementation includes support for the GGUF format and for AMD, Apple Silicon, and Intel hardware using OpenCL and PTX.

PS: Juan Fumero, one of the project leads, recently announced that he has joined the Java Platform Group at Oracle - congratulations! For my part, I’m now looking forward to the first traces of TornadoVM in OpenJDK proper.

At KotlinConf 2025, a long-awaited change was announced - rich errors are coming to the language! This is a new, much more idiomatic way to handle errors: instead of using exceptions or wrapping results in Result or Either, a function will be able to declare a specific error type as part of its signature, for example: fun load(): User | NotFound, which clearly conveys the possible return states - success or error.

    fun load(): User | NotFound

    when (val user = load()) {
        is User -> println("Hello, ${user.name}")
        is NotFound -> println("Not found!")
    }

This mechanism is being formalized in the new proposal KEEP-0441: Rich Errors by Michail Zarečenskij and Roman Venediktov, which describes the motivation, design, and operation of this innovation. It enables the use of so-called “error union types,” i.e., a set/union of error types, natively in Kotlin without external libraries. The documentation includes details on how to define error classes (e.g., error class ParsingError(val msg: String)), how to declare error aliases, and an encouragement to use strong, typed error handling enforced at the compiler level.
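Since rich errors are still a proposal, one way to get a feel for the idea on the JVM today is the sealed-hierarchy pattern. The sketch below is only an analogy written in Java 21+, not the Kotlin feature itself: LoadResult, User, NotFound, and the load/describe helpers are hypothetical names that mirror the Kotlin snippet above. What it shares with rich errors is that the error case is part of the return type and the compiler checks that every case is handled.

```java
// Hypothetical domain types, named after the Kotlin snippet above.
sealed interface LoadResult permits User, NotFound {}

record User(String name) implements LoadResult {}

record NotFound(String id) implements LoadResult {}

class RichErrorsAnalogy {
    // Stand-in for a real lookup: in this sketch only the id "42" exists.
    static LoadResult load(String id) {
        return id.equals("42") ? new User("Duke") : new NotFound(id);
    }

    static String describe(LoadResult result) {
        // No default branch: the compiler rejects this switch if a new
        // LoadResult subtype is added and not handled here.
        return switch (result) {
            case User user -> "Hello, " + user.name();
            case NotFound notFound -> "Not found: " + notFound.id();
        };
    }

    public static void main(String[] args) {
        System.out.println(describe(load("42")));
        System.out.println(describe(load("7")));
    }
}
```

The difference from the proposal is the wrapper: here User has to implement a shared LoadResult interface, whereas an error union type would let load() return User | NotFound without any common supertype.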

As a bonus, there’s a post on the Kotlin blog, Kotlin on the Backend – What’s New From KotlinConf 2025 by Alina Dolgikh which more broadly discusses backend updates (though without an in-depth focus on rich errors). The article describes strategic cooperation with Spring, the growing use of Kotlin on the server, improvements in Ktor and Exposed, the development of Amper, and reinforces the role of the backend in Kotlin as a solid and promising path.

PS: A new Kotlin survey from JetBrains has also been released—it’s worth filling out, as it’s one of the main channels through which the community can have a real impact on the direction of the language’s development.

And speaking of Kotlin and its features - in his post Approximating Named Arguments in Java, Ethan McCue shows how Java can imitate the named arguments mechanism known from Kotlin or Python. He analyzes various techniques - from the builder pattern, through records with default constructors and “withers,” to classic mutable transfer objects - discussing their advantages, drawbacks, and the amount of boilerplate that still remains to be written. The article is an interesting starting point for reflecting on whether and how such a mechanism might one day make its way into the language itself.
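To make the trade-offs a bit more concrete, here is a minimal sketch of one of the approaches mentioned above: a record with a defaulting factory and “wither” methods. The RequestOptions record, its components, and the default values are hypothetical and chosen only for illustration; they are not taken from Ethan McCue’s article.

```java
// Hypothetical example type: the record components stand in for named parameters.
record RequestOptions(String url, int timeoutSeconds, int retries, boolean followRedirects) {

    // "Default arguments": only the required value is passed, the rest get defaults.
    static RequestOptions of(String url) {
        return new RequestOptions(url, 30, 0, true);
    }

    // "Withers": each returns a copy with exactly one component changed,
    // so the call site names the argument it is setting.
    RequestOptions withTimeoutSeconds(int timeoutSeconds) {
        return new RequestOptions(url, timeoutSeconds, retries, followRedirects);
    }

    RequestOptions withRetries(int retries) {
        return new RequestOptions(url, timeoutSeconds, retries, followRedirects);
    }

    RequestOptions withFollowRedirects(boolean followRedirects) {
        return new RequestOptions(url, timeoutSeconds, retries, followRedirects);
    }
}

class NamedArgumentsDemo {
    public static void main(String[] args) {
        // Reads almost like named arguments, in any order, with defaults for the rest.
        RequestOptions options = RequestOptions.of("https://example.com")
                .withRetries(3)
                .withTimeoutSeconds(5);
        System.out.println(options);
    }
}
```

The call site is readable, but the boilerplate is plain to see: every component needs its own wither, and each one has to repeat the full constructor call.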

Metals is a language server for Scala, developed under Scalameta and widely used in environments such as VS Code, IntelliJ IDEA, and Neovim. Its role is to provide intelligent hints, code navigation, refactoring, and compiler integration. Thanks to this, working in any editor becomes closer to the experience offered by a full IDE. The novelty is that Metals can now also act as a Model Context Protocol server, which opens up quite interesting possibilities.

In his post An overview of using Claude Code, Metals, and NVIM, Chris Kipp describes his path from skepticism about AI-assisted development to creating an effective flow in the terminal environment of Neovim/tmux. The combination of Claude Code (Anthropic’s AI assistant) with Metals acting here as an MCP server allows Claude Code to perform compilations, run tests, and analyze code with immediate contextual support—without leaving the editor. Thanks to this, with an AI co-author you can work without losing control and with a significant boost in productivity. An interesting case study.

It’s worth taking a look at an excellent piece, Just Be Lazy by Per-Ake Minborg. The article presents JEP 502: Stable Values (Preview) - official support for lazy values, functions, and collections in Java 25. Thanks to this, new APIs such as StableValue.supplier or StableValue.function enable lazy computations that the HotSpot JIT can treat as constants (constant-fold), provided they originate from a static final field and are trusted—which markedly improves performance.

It’s a really good text because it not only presents the new feature but also illustrates its operation in a simple way - showing how to create a logger cache or an SQRT_CACHE function, allowing HotSpot to compile a call down to a constant value. In addition, the author explains why the term “stable” was chosen instead of “lazy,” and the entire piece opens your eyes to how the new mechanism synthesizes flexible initialization with performance and code predictability.
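For contrast, this is roughly what lazy initialization looks like without the new API. The sketch below deliberately does not use StableValue (a preview API in JDK 25 that requires --enable-preview); it is a hand-written memoizing Supplier with double-checked locking, and the Lazy class name is made up for this example. It guarantees at-most-once initialization, but the JIT sees an ordinary mutable field and generally cannot treat the result as a constant, which is the gap JEP 502 aims to close.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Hypothetical helper, NOT the JEP 502 API: computes a value at most once, on first use.
final class Lazy<T> implements Supplier<T> {
    private final Supplier<? extends T> initializer;
    private volatile T value; // null means "not computed yet"

    Lazy(Supplier<? extends T> initializer) {
        this.initializer = initializer;
    }

    @Override
    public T get() {
        T result = value; // single volatile read on the fast path
        if (result == null) {
            synchronized (this) {
                result = value;
                if (result == null) {
                    result = initializer.get(); // assumed to return a non-null value
                    value = result;
                }
            }
        }
        return result;
    }
}

class LazyDemo {
    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        Supplier<String> greeting = new Lazy<>(() -> {
            calls.incrementAndGet();
            return "expensive value";
        });
        System.out.println(greeting.get());
        System.out.println(greeting.get());
        System.out.println("initializer calls: " + calls.get());
    }
}
```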

And to wrap up this section, Sharat C., Director of Java Developer Engagement at Oracle and for years the “face” of the JavaOne conference, shared great news: the event returns to the San Francisco Bay Area on March 17–19, 2026. It’s worth putting that date on your calendar today.

2. Release Radar

Groovy 5.0

I’m a bit sorry to feel obliged to remind you of this, but Groovy is a dynamic-and-static programming language created as a complement to Java - a more flexible, scripting counterpart that retains Java-familiar syntax while adding elements from languages like Python and Ruby. Its first versions appeared in 2003–2007, and since version 2.0 (2012) it can also be compiled statically, which significantly improved performance and brought it into the mainstream. I still remember when it was the standard language for scripting (where it excelled) and for writing tests (Spock is my favorite testing framework ever 🖖).

In recent years, Groovy hasn’t attracted as much attention as it once did. Its role compared to Java has clearly diminished, and the language’s development has happened more in the background—even though it still underpins solutions like Jenkins (Pipeline), Gradle, and Grails (a framework developed by Graeme Rocher, later the creator of Micronaut). This has led many developers to forget how valuable the language is—especially since new features were rare or less prominent.

Among the novelties in Groovy 5.0 you’ll find repeat, zip, and zipAll methods for working with collections, support for lazy iterators, as well as significantly accelerated operations on arrays - up to 10× faster! In addition, there’s a new AST transform, @OperatorRename, that makes operator overloading easier (yes, Groovy can do that), a refreshed REPL shell (with syntax highlighting, history, and auto-completion), added support for pattern matching in instanceof, and support for JEP 512 (compact source files and instance main methods). The release also introduces improvements for Jakarta EE servlets and Groovlets (Groovy scripts that run like a servlet 🙈), strengthening its capabilities in web applications.

Groovy 5.0 is designed for JDK 11 and newer, which means it continues tight integration with Java at the level of binary compatibility and interoperability (though do take a look at its bytecode sometime - there are plenty of neat solutions in there). The language still allows you to use Java libraries, and Groovy code is compiled to JVM bytecode that interoperates directly with Java code. Additionally, extensions such as extension methods are designed to work smoothly within the Java ecosystem - without breaking existing libraries or forcing new dependencies.

PS: My first article ever was We tried Groovy EE — and what we have learned from it—which is why I have a huge soft spot for Groovy in my heart.

IntelliJ IDEA 2025.2

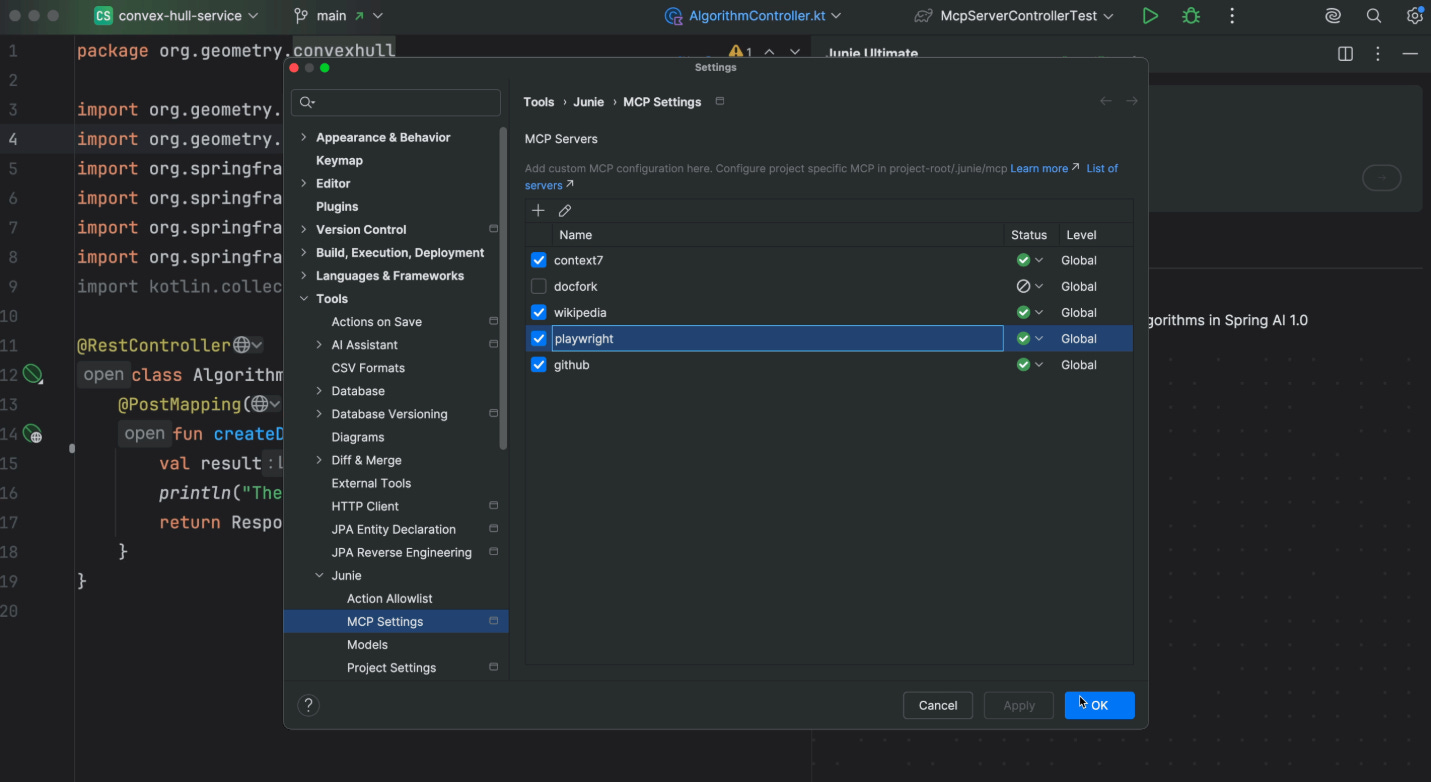

IntelliJ IDEA 2025.2 shows that JetBrains is leaning increasingly hard into AI - you can see it in the release priorities: AI Assistant, Junie, and MCP support are what’s meant to attract users and keep them in the IDE, especially now that aggressive, specialized editors/agent-UIs like Cursor are appearing.

The AI features in 2025.2 are significantly expanded and deserve their own paragraph: Junie received speedups and strong Model Context Protocol support, and the editor itself can now act as an MCP server, providing agents with a full toolkit (compilation, tests, project analysis). This transforms AI from a simple “suggestion engine” into an active assistant that truly understands the project state.

Additionally, AI Assistant offers offline code completion (e.g., for Java, SQL, YAML, JSON, Markdown), the ability to choose a local model, and new mechanisms for configuring project rules (Project Rules), giving teams control over style and generation costs. It’s clear JetBrains is going its own way: it’s no longer just about convenience, but about a trusted, validated execution context for models. Considering their customers aren’t only IndieHackers, that probably makes a lot of sense.

Beyond AI, 2025.2 includes a raft of improvements important for JVM folks: full support for Java 25 (including preview features), virtual-threads debugging, better Spring UX (Spring Debugger, Spring Modulith), and readiness for Maven 4 and JSpecify. At the same time, JetBrains hasn’t forgotten other JVM languages: the Scala Plugin 2025.2 update brings full support for opaque types, new tuple operations, improved understanding of Scala 3.x syntax, and a default layout for new sbt modules.

To wrap up - two items explicitly worth noting: Kotlin Notebook is integrating ever more tightly with the IntelliJ platform, making it easier to prototype plugins and experiment with interactive notebooks in the JetBrains ecosystem (see Kotlin Notebook Meets IntelliJ Platform: Advancing IDE Plugin Development by 🥑 Jakub Chrzanowski for more), and Bazel has reached GA in the official plugin, making Bazel a first-class citizen of the IDE with deep target → module mapping and multi-language support—and as you saw in the earlier Airbnb example, this solution is becoming increasingly popular. You can learn more in Bazel Plugin Release: General Availability by Justin Kaeser.

As usual, you’ll find more in the full release notes. And if you prefer video, the JetBrains Developer Advocacy team has published a very good introduction.

Gradle 9.0

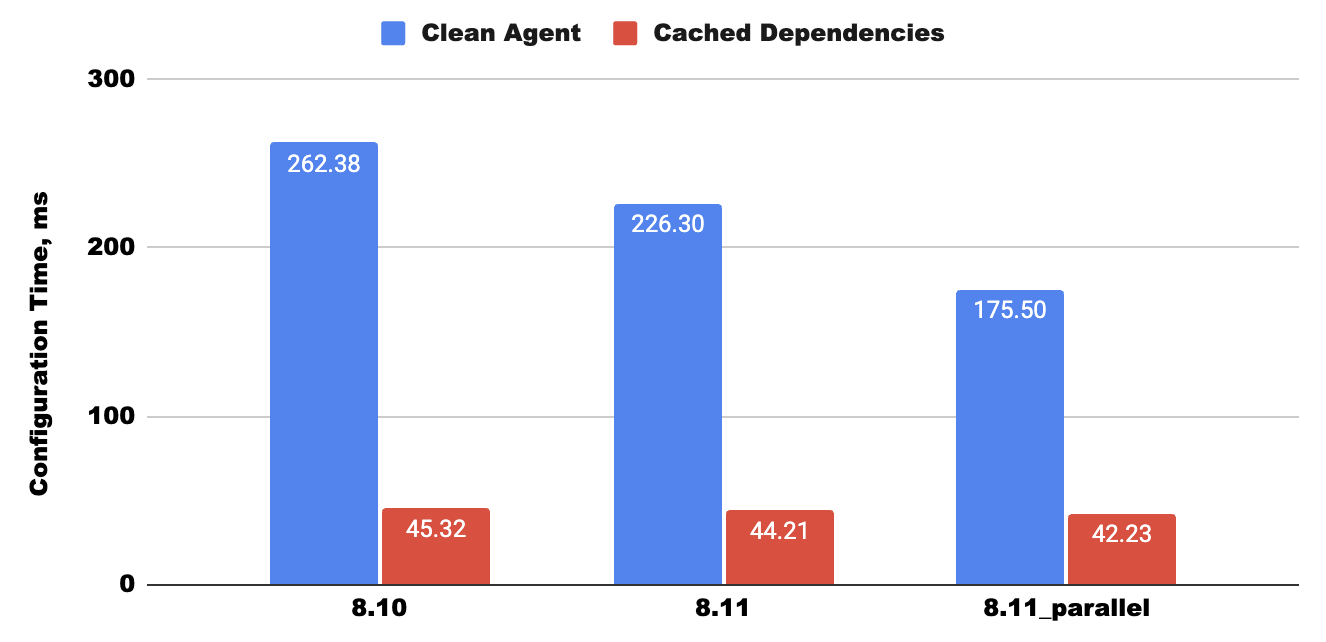

Gradle 9 earns the “big number,” because it changes the paradigm for running builds—Configuration Cache is no longer experimental and becomes the preferred (default) execution mode, with improvements that avoid recompiling Kotlin DSL scripts and reduce memory usage. This isn’t a cosmetic change: it means Gradle is designing subsequent features and deprecations with continuously cached operation in mind, which simplifies the tool’s internal architecture and opens the door to larger changes (e.g., Isolated Projects options).

The practical effect of this decision is real speed-ups and resource savings: the latest Configuration Cache improvements reduce cache size and speed up configuration (in Java/Kotlin/Android scenarios you can see significant time savings - reports mention ~2× faster configuration and even 4× smaller cache sizes in some cases), while also introducing better error reporting, toolchain updates (Kotlin 2, Groovy 4), and raising the minimum required JDK to run Gradle - all of which add up to noticeably better DX for large monorepos (see how that theme keeps coming up) and CI. At the same time, Gradle tightens rules around build logic (detecting implicit inputs and errors incompatible with Configuration Cache), making builds less flexible but more deterministic and correct - though this does require some migration effort from teams.

In practice, this also means a decisive migration phase: new projects will get Configuration Cache enabled by default, Gradle will gently nudge and encourage older builds to switch, and at the same time some old practices will be deprecated or tightened (e.g., changes in the behavior of registering providers and listeners can now cause errors if they don’t meet Configuration Cache requirements—there are transitional flags available during migration).

As usual, you’ll find more in the full release notes.

WildFly 37.0

WildFly 37.0 is primarily a major stabilization release - the project focused on bug fixes and reducing technical debt while delivering full compatibility with Jakarta EE 10 and MicroProfile 7.0. The server recommends Java SE 21 (though it still works well on SE 17), which in practice means WildFly 37 is ready to run on the newer JDK LTS releases.

Among the smaller but important improvements is a new property in the messaging-activemq subsystem - scale-down-commit-interval - which lets you control the batch size of messages sent during scale-down and helps prevent OutOfMemoryError during message migration between nodes. The release notes also mention the project’s move under the Commonhaus Foundation and the migration of repositories to Nexus 3, which was a major operational effort by the WildFly team.

In parallel with classic WildFly, the team continues work on WildFly Preview - a channel where elements related to Jakarta EE 11 (including the Core Profile) are tested so they can later be included in the standard release.

Hibernate ORM 7.1

Hibernate ORM 7.1 introduces several important yet carefully designed improvements—the most significant are managed-resource discovery in SE environments, allowing the JPA provider to discover managed entities in a simpler, specification-aligned way; an expanded and streamlined API for pessimistic locking (new org.hibernate.Locking, Locking.Scope, Locking.FollowOn, and org.hibernate.Timeouts); and the ability to intercept events related to Session#merge via an Interceptor.

There are also practical enhancements - better DDL (automatic check constraints for certain inheritance hierarchies) and JSON optimizations for Oracle 21+. New SPI extensions related to locking were added (e.g., LockingSupport, LockingClauseStrategy) and small but important SQL behaviors (WAIT/NO WAIT/SKIP LOCKED for H2). For this reason, it’s worth reviewing the official Migration Guide and testing critical blocking/lock scenarios and bytecode-enhancement mechanisms before updating production.

Alongside classic ORM, the Hibernate ecosystem also offers Hibernate Reactive - an API designed for non-blocking operation with database clients compatible with Vert.x, well-suited for use in Quarkus or other reactive runtimes; if you’re migrating to reactive architectures, it’s worth looking into this path, as it lets you share entities with the traditional ORM and offers APIs based on both CompletionStage and Mutiny Uni.

Meanwhile, on August 8, 2025, Hibernate Search 8.1 was released - an update that expands aggregation capabilities in the DSL (including composite aggregations and aggregations returning values of different types), updates Lucene backends and Elasticsearch/OpenSearch clients, and aligns with the ORM 7.1 line, making it a natural choice when you need full-text search and advanced analytics in JVM applications.

LangChain4j 1.3.0

LangChain4j 1.3.0 is above all a step toward “agentic” applications - the release introduces two experimental modules: langchain4j-agentic and langchain4j-agentic-a2a, which provide abstractions and tools for building agentic, AI-driven workflows. This signals that the library is evolving from a simple LLM client toward more complex agent orchestration.

Beyond that, the changelog includes a series of practical fixes and additions: documentation for the Infinispan Embedded Store, bug fixes (including those related to joins in Infinispan and output guardrails), dependency updates (e.g., Couchbase client), deprecation of the old vector API in favor of withFloatVectors, and minor documentation and test improvements.

You’ll find the full changelog, links to docs, and implementation details in the release notes—it’s worth a look before upgrading, especially if you use Infinispan/Couchbase or plan to experiment with agents.

PS: This release also saw new contributors - a good sign of the project’s health.

3. GitHub All-Stars

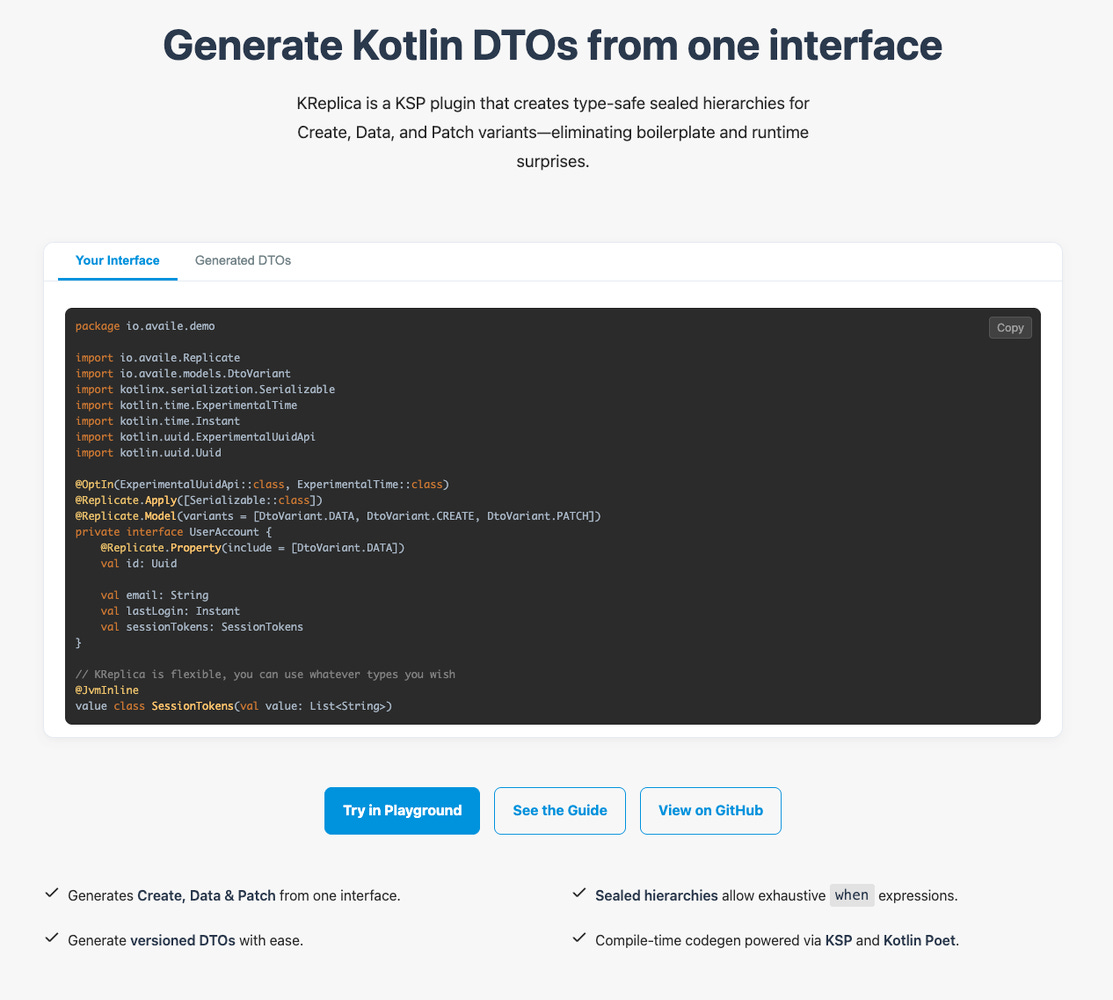

KReplica

KReplica is a lightweight plugin based on Kotlin Symbol Processing (KSP), designed to eliminate boilerplate related to DTOs and mapping between application layers. You install it as a KSP processor, and during compilation it generates mapping code/adapters for your data classes—resulting in fewer hand-written converters, fewer errors, and faster iterations. More information and a demo can be found on the project page: kreplica.availe.io.

The mechanism that makes KReplica particularly interesting is the use of sealed hierarchies to model API versions/variants: the generated code and mapping mechanisms are designed so that the compiler enforces handling all cases. If you add a new field or a new API version and forget to update the mappings, compilation will fail instead of allowing silent runtime glitches. The effect: more “bulletproof” APIs, fewer regressions when evolving contracts, and better DX for teams maintaining inter-service contracts.

javadocs.dev/mcp

javadocs.dev is a simple, open-source service by James Ward that fetches and serves Javadocs from artifacts available in Maven Central in a convenient, browser-based form - you enter groupId/artifactId/version and get ready-made documentation (HTML) for a given library version. The service acts as a lightweight front end to the javadoc.jar and makes it easy to quickly browse APIs without downloading entire artifacts locally.

The fresh MCP release for javadocs.dev is a simple but clever move - James launched a lightweight MCP server that lets AI assistants fetch official Javadocs from artifacts in Maven Central in a streaming manner. Instead of manually searching the docs or relying on a model’s (often outdated) memory, an MCP agent can query javadocs.dev/mcp for a class description, method signatures, or API examples and receive a structured response.

jdtfmt

jdtfmt by Benjamin Marwell aspires to be gofmt for Java - a simple, non-configurable (by default), blisteringly fast source formatter you can drop into CI and developer workflows without mercy, because it always produces a predictable, idempotent result.

jdtfmt is an opinionated, Eclipse-JDT-based CLI formatter whose goals (no default config, idempotence, preservation of comments and intentional blank lines, and fast native binaries) map exactly to the gofmt idea - one well-defined style that removes style debates and makes automation trivial. Technically it uses Eclipse JDT (Java Development Tools - a set of tools and libraries that form the “Java engine” in the Eclipse ecosystem) for formatting, PicoCLI for command-line parsing, and GraalVM to build native, statically linked binaries, which yields a big speedup compared to running the JAR.

The project is still in its early stages, but the concept is clear and practical: if you like the ergonomics and conventions that came out of the Go ecosystem, jdtfmt aims to be the same for Java. It’s worth trying in a local repo or CI, especially if you want fast, deterministic formatting with minimal configuration overhead.


Destination-FAANG

Finally - for everyone looking for a job - I’ve got a small surprise: the Destination-FAANG-Java-Solution repository - a collection of solutions to popular LeetCode problems written in Java (implementations + test files). The repo is popular in the community and worth browsing as a quick exercise bank before interviews.

Take a look if you want to practice the classics (Two Sum, Binary Tree, LRU Cache, Longest Subsequence, and hundreds more), compare approaches, or quickly find ready-made implementations for testing and learning—though these days most people probably use ChatGPT for that 😁.

PS: quick reminder from last week:

Two QCon events worth your time (and discounts):

QCon San Francisco (November 17-21, 2025) Deep, practitioner talks across architecture, data, and engineering leadership. Use code JVMSF100 for $100 off.

QCon AI New York (December 16-17, 2025) Practical, production-grade AI for the SDLC. Use code JVMAI35 for $35 off.

YouTube is great, but the hallway track is where the magic happens. Pick the broad SF deep-dive, the focused AI NYC, or… be bold and do both. 😊